The largest online community of AI developers and researchers, Hugging Face hosts more than a million machine learning models, along with hundreds of thousands of datasets and AI-powered demo applications. Today, it is one of the most important hubs of data, tools, and infrastructure for developing and scaling both commercial and open-source AI projects. Discover what Hugging Face is used for, its key components, and how it’s shaping the AI industry.

Core Tools: Models, Datasets, and Libraries

Hugging Face is a platform that brings together a rich ecosystem of tools and resources for building AI applications. It supports everything from training machine learning models to deploying them, making it a go-to choice for both researchers and developers who want flexible and scalable solutions.

To get a clear idea of what Hugging Face is and how it works, it helps to look at its main parts: Model Hub, Transformers, Datasets, and Spaces. This platform stands out because it offers open access to thousands of pre-trained models and datasets, enabling users to build and fine-tune AI applications without starting from scratch.

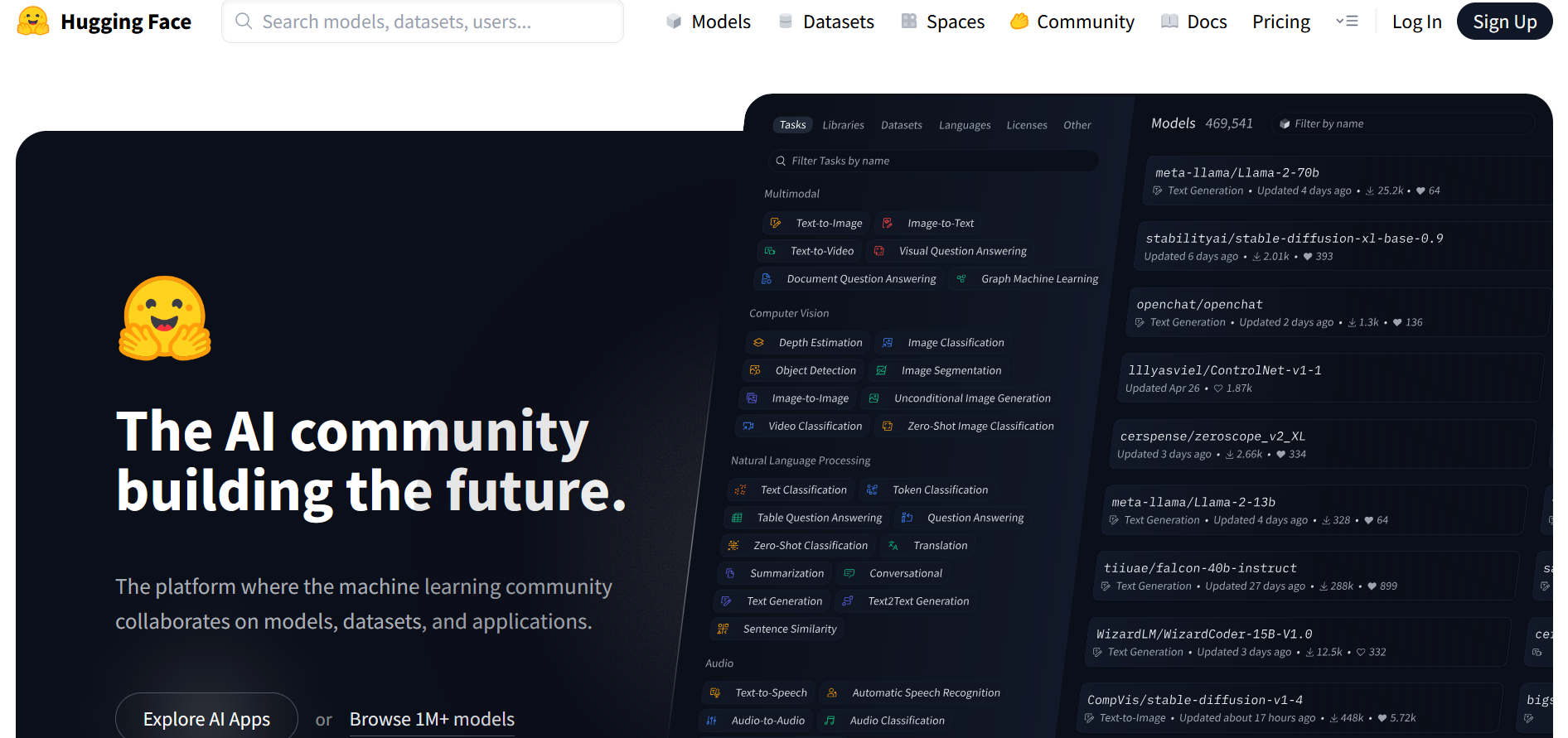

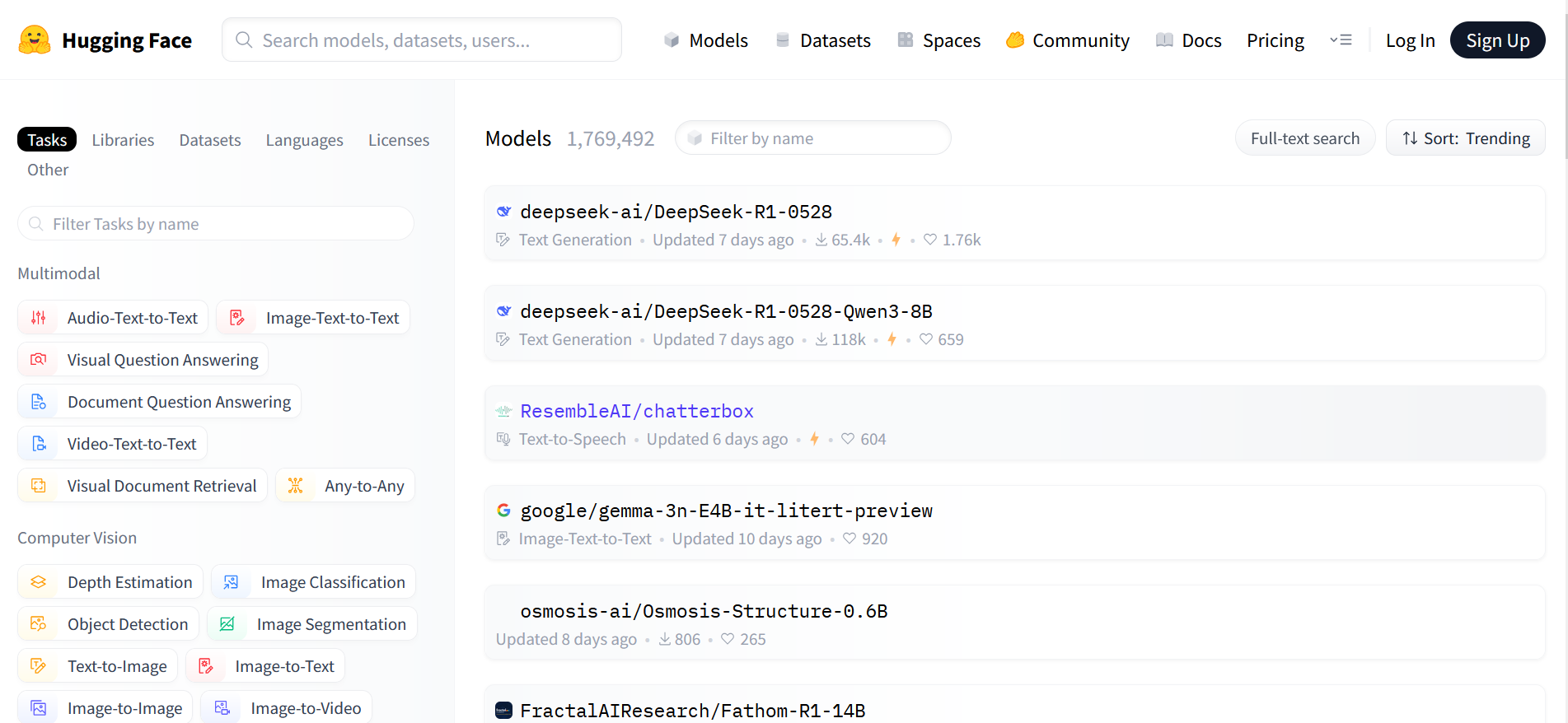

Model Hub

Model Hub is the largest online database of machine learning models. Its library contains more than 1.7 million models of different types and purposes. Developers can use these ready-made models or upload their own for quick access, deployment, and sharing, which contributes to the growth of the community and the expansion of the open ecosystem.

Finding the right models is straightforward with this platform’s handy search tools. You can filter by different criteria, download models, or even deploy them in the cloud. This makes it very easy for developers to quickly locate the perfect solutions and smoothly add them to their projects, which really speeds things up when building AI systems.
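The same search can also be scripted. The sketch below assumes the separate `huggingface_hub` client library is installed; the helper names `top_models`, `format_rows`, and `fetch_config` are hypothetical and chosen only for illustration.

```python
def top_models(task: str, limit: int = 5) -> list[tuple[str, int]]:
    """Return (repo id, download count) pairs for Hub models tagged with `task`."""
    # Lazy import: huggingface_hub is only needed when the Hub is actually queried.
    from huggingface_hub import HfApi

    # Filter by task tag and ask the Hub to sort the results by download count.
    models = HfApi().list_models(filter=task, sort="downloads", limit=limit)
    return [(m.id, m.downloads or 0) for m in models]


def format_rows(rows: list[tuple[str, int]]) -> str:
    """Render the pairs as tab-separated lines, one model per line."""
    return "\n".join(f"{repo_id}\t{downloads}" for repo_id, downloads in rows)


def fetch_config(repo_id: str) -> str:
    """Download only `config.json` from a model repo and return its local path."""
    from huggingface_hub import hf_hub_download

    return hf_hub_download(repo_id=repo_id, filename="config.json")
```

With network access, `print(format_rows(top_models("text-classification")))` would list a handful of models for that task, and `fetch_config` pulls a single file from a repository rather than the whole model.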

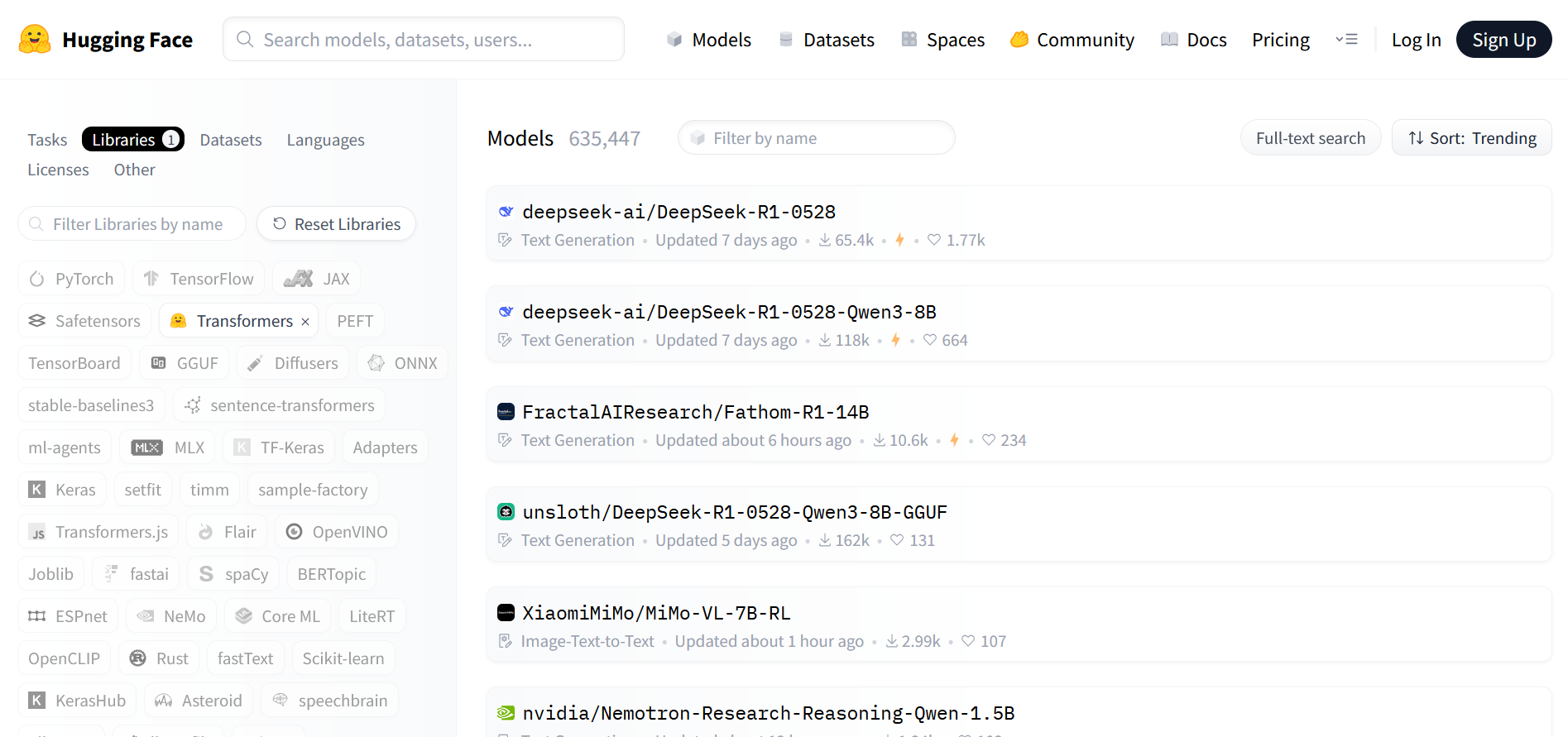

Model Hub is tightly integrated with the Transformers library of pre-trained and tuned models, as well as dozens of other ML libraries hosted on the Hugging Face platform. This architecture increases compatibility between components and opens up broad opportunities for model reuse across projects and environments.

Transformers

The flagship Hugging Face Transformers library gives access to more than 630,000 state-of-the-art (SOTA) models of various types, including multimodal ones. They are designed for text, image, and video generation, as well as computer vision and other tasks. Developers can deploy them in applications or use them as a starting point to train and fine-tune custom models on their own datasets.

The library supports dozens of popular architectures, including BERT, GPT, T5, CLIP, and others. This gives developers the opportunity to cover a wide range of tasks, from sentiment analysis to code generation. A single interface and extensive documentation allow them to easily switch between models, adapting them to specific cases without having to dive deep into the implementation details of each architecture.

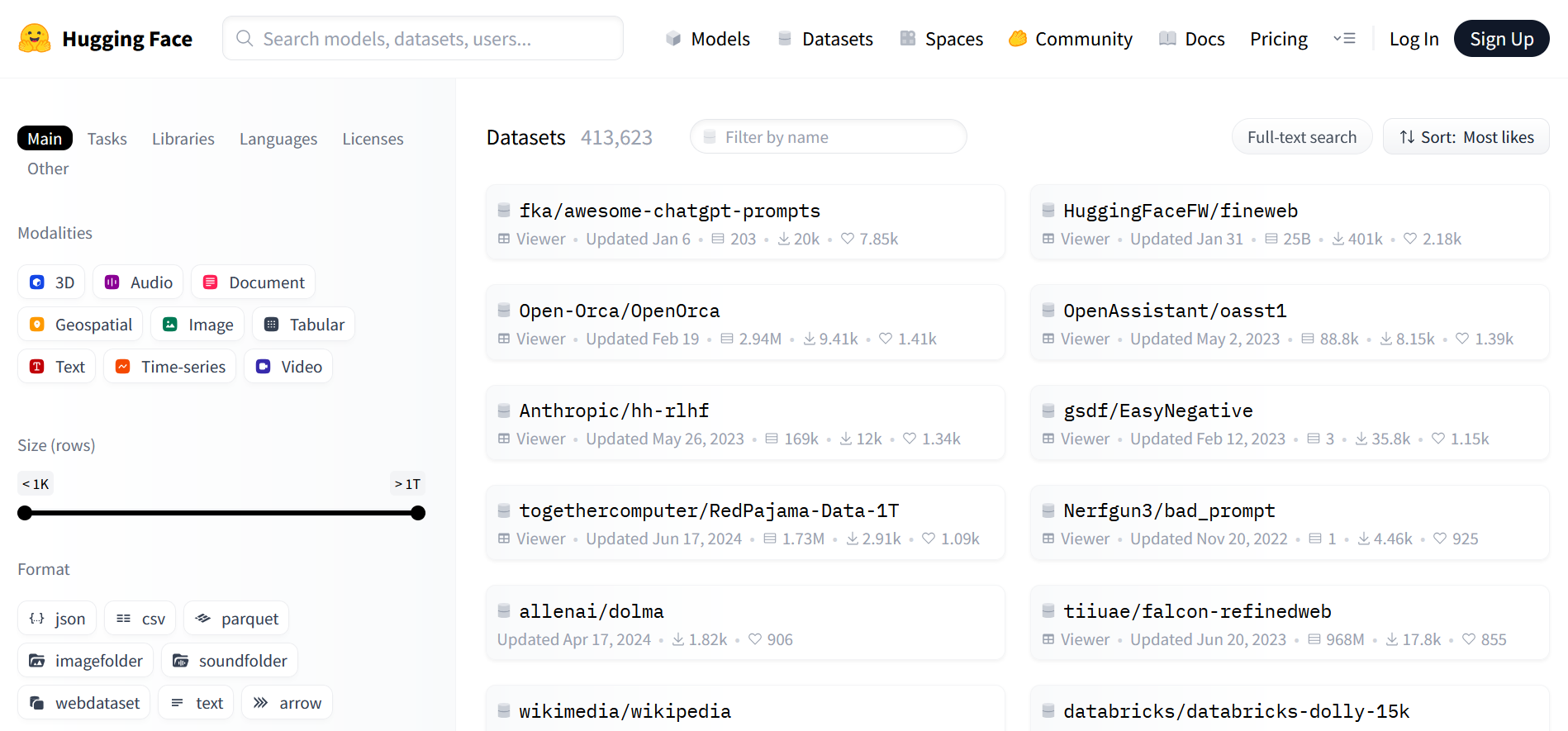

Datasets

Hugging Face offers over 400,000 datasets to help developers successfully train and test various machine learning models. These datasets cover a wide range of tasks: text generation, analysis and translation, image classification, automatic speech recognition, and much more. This quantity and diversity lets developers select suitable data for training neural networks and for integrating models into third-party programs and products.

Each dataset comes with a dataset card that shows what’s inside, how big it is, its format, and the type of license it has. This makes it easy to quickly see if the dataset fits what you’re looking for. For some datasets, you can also preview sample data right in the browser, so you can get a feel for how it’s organized without having to download anything. This significantly simplifies selection and speeds up the preparation of data for training and testing models.
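A similar quick look is possible from code. This is a minimal sketch that assumes the separate `datasets` library is installed and that the chosen dataset supports streaming; `preview` and `shorten` are hypothetical helper names.

```python
from itertools import islice


def preview(name: str, split: str = "train", n: int = 3) -> list[dict]:
    """Stream the first `n` examples of a Hub dataset without a full download."""
    from datasets import load_dataset  # lazy import of the `datasets` library

    # streaming=True yields examples one by one instead of downloading the split.
    stream = load_dataset(name, split=split, streaming=True)
    return list(islice(stream, n))


def shorten(example: dict, width: int = 80) -> dict:
    """Truncate long string fields so an example fits on screen."""
    return {
        key: value[:width] + "..." if isinstance(value, str) and len(value) > width else value
        for key, value in example.items()
    }
```

For instance, `[shorten(row) for row in preview("stanfordnlp/imdb")]` would show the first few reviews in trimmed form; the dataset name here is only an example.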

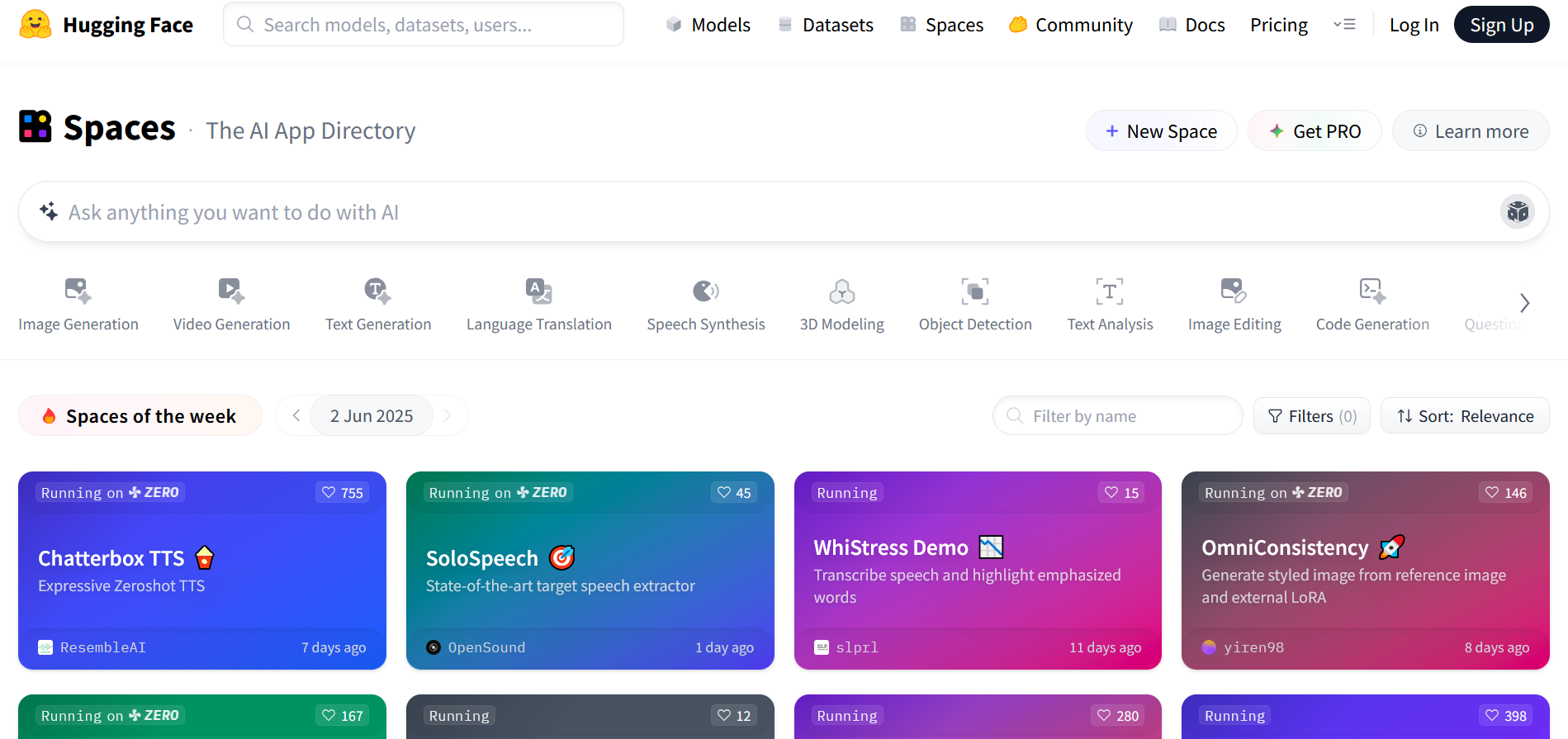

Spaces

The native Spaces service is designed to deploy applications involving machine learning models, as well as to store and share finished software. With its help, developers can publish demo versions of applications (spaces) in personal or corporate accounts, which simplifies the demonstration and testing of AI solutions.

Spaces supports multiple SDKs, allowing you to create Python applications as well as static projects using HTML and JavaScript. This flexibility makes the platform suitable for developers of all levels, from beginners to experienced professionals.
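As an illustration of the Python route, the sketch below assumes Gradio is the SDK chosen for the Space (the text above does not name a specific SDK). The `shout` function is a toy stand-in for a real model call, and both function names are hypothetical.

```python
def shout(text: str) -> str:
    """Toy stand-in for a model call: upper-case the input and add emphasis."""
    return text.upper() + "!"


def build_demo():
    """Wrap `shout` in a small web UI with one text input and one text output."""
    import gradio as gr  # lazy import; Gradio is an assumed SDK choice

    return gr.Interface(fn=shout, inputs="text", outputs="text")
```

In a Space, the application file would typically finish by calling `build_demo().launch()` to start the web interface.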

The Spaces section of the Hugging Face platform features over 600,000 demo applications with machine learning algorithms for text, audio, and video generation, speech synthesis, 3D modeling, and other tasks. Among them, a special place is occupied by AI Comic Factory, an online comics generator based on artificial intelligence that demonstrates the creative capabilities of the platform. Spaces creates a vibrant community where users can not only deploy their projects but also be inspired by the ideas of others, contributing to the development of the open-source AI ecosystem.

Practical Implementation: Using the Transformers Library

Developers looking for how to use Hugging Face in real-world projects should start with the Transformers library, one of the main components of the platform. It provides access to thousands of pre-trained models, including large language models (LLMs) and vision-language models (VLMs), each available for download or for integration with third-party applications via API. The platform supports fine-tuning of Transformers models, continued training, deployment, and performance analysis using the PyTorch, TensorFlow, and JAX frameworks.

The models presented in this library are capable of performing a wide range of tasks:

- answering questions while taking into account the context;

- creating summaries from voluminous documents;

- text classification and generation;

- searching for specific data in texts (token classification).

Transformers models can automatically recognize speech and convert it to text, and they can classify characteristics of audio recordings (language, speaker, etc.). In addition, they can segment images and identify objects within them, as well as learn through reinforcement. Many models in the library are multimodal: they can work with several types of data simultaneously (text, images, video).

To understand how to use Hugging Face models from the Transformers library, it is important to familiarize yourself with the basic tools that make interacting with them easier:

- The Pipeline API is a tool for running models and obtaining results from them with minimal code. It covers a variety of tasks in different modalities and can use any compatible model from the Hugging Face Model Hub. Each task has a recommended default model and preprocessor, but these are easy to override. To run a specific task, you specify the task ID and, optionally, the chosen model, then pass in your input. The Pipeline API also provides a wide range of additional parameters for configuring the pipeline, from optimizing model performance to adapting to specific task requirements.

- The Trainer API is a tool for organizing the full cycle of training, fine-tuning, and evaluation of PyTorch models on user data. It automates key stages of training, including loading and preparing data, calculating metrics, saving checkpoints, and managing training loops. To use it, you connect the selected model and preprocessor and set the parameters for training or fine-tuning. This API is especially useful when creating custom solutions that require adapting models to specific business problems or the specifics of user data. In the next section of our Hugging Face overview, we will describe in more detail the procedure for working with models using this tool.

- The Generate API is a tool for generating text with pre-trained language and multimodal models from the Transformers library. With its help, developers can connect any LLM or VLM with generative capabilities available in the Hugging Face Model Hub and receive text responses based on input data. The interface supports streaming generation and also offers various decoding strategies, allowing flexible control over the style, length, and variability of generated results. This approach simplifies the implementation of complex generative models in applied solutions and accelerates the development of AI products.
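To make the Pipeline API concrete, here is a minimal sketch that assumes the `transformers` library and a backend such as PyTorch are installed. The helper names `describe` and `classify` are hypothetical; `pipeline` itself is the library's entry point.

```python
from typing import Optional


def describe(prediction: dict) -> str:
    """Turn one pipeline prediction into a short string such as 'POSITIVE (0.87)'."""
    return f"{prediction['label']} ({prediction['score']:.2f})"


def classify(texts: list[str], model_id: Optional[str] = None) -> list[str]:
    """Run the text-classification pipeline over `texts`."""
    from transformers import pipeline  # lazy import of the Transformers library

    # With model=None the pipeline falls back to the task's default checkpoint;
    # passing a Hub repo id selects a specific model instead.
    classifier = pipeline(task="text-classification", model=model_id)
    return [describe(p) for p in classifier(texts)]
```

Calling `classify(["I love this library."])` downloads the default checkpoint on first use and returns one label with its score per input text.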

Each model from the Transformers library consists of three main elements: configuration, the model itself, and a preprocessor. Thanks to this approach, it can be easily used for both training and obtaining results (inference) via the API. Using pre-trained models from this library helps developers save resources and implement AI-based solutions faster.
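The three elements can be loaded separately, as in the sketch below, which also shows how a decoding strategy is passed to `generate()`. It assumes `transformers` with PyTorch is installed; the checkpoint `openai-community/gpt2`, the preset values, and the helper names are illustrative assumptions rather than recommendations.

```python
def decoding_kwargs(strategy: str) -> dict:
    """Map a strategy name to keyword arguments accepted by `generate()`."""
    presets = {
        "greedy": {"do_sample": False},
        "sampling": {"do_sample": True, "temperature": 0.7, "top_p": 0.9},
        "beam": {"do_sample": False, "num_beams": 4},
    }
    return presets[strategy]


def complete(prompt: str, model_id: str = "openai-community/gpt2",
             strategy: str = "greedy") -> str:
    """Generate a short continuation of `prompt` with the chosen strategy."""
    from transformers import AutoConfig, AutoModelForCausalLM, AutoTokenizer

    config = AutoConfig.from_pretrained(model_id)        # 1. configuration
    tokenizer = AutoTokenizer.from_pretrained(model_id)  # 2. preprocessor
    model = AutoModelForCausalLM.from_pretrained(model_id, config=config)  # 3. model

    inputs = tokenizer(prompt, return_tensors="pt")
    output_ids = model.generate(
        **inputs,
        max_new_tokens=30,
        pad_token_id=tokenizer.eos_token_id,  # this checkpoint defines no pad token
        **decoding_kwargs(strategy),
    )
    return tokenizer.decode(output_ids[0], skip_special_tokens=True)
```

Switching `strategy` from `"greedy"` to `"sampling"` trades repeatable output for more varied text, which is the kind of control over variability that decoding strategies provide.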

Fine-Tuning, Evaluating, and Sharing Models

Beyond running pre-trained models, the platform lets developers fine-tune, evaluate, and share ML models. These capabilities make working with models more flexible and efficient, and they help developers create high-quality, tailored solutions while actively interacting with the community.

Fine-tuning

The Trainer API allows you to fine-tune any publicly available model from the Model Hub. It makes it easy to tailor a pre-trained language model for specific tasks like text classification, generating responses, or sentiment analysis.

Fine-tuning usually involves using smaller, more focused datasets rather than huge amounts of data. This method is much quicker and requires less computing power than training a model from the ground up, making it a great option when resources are limited or when working on very specific tasks.
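A fine-tuning run with the Trainer API might look like the following sketch. It assumes `transformers`, `datasets`, and PyTorch are installed; the checkpoint, the dataset, the 1,000-example subset, and the hyperparameters are all illustrative assumptions, and `fine_tune` is a hypothetical helper name.

```python
def compute_metrics(eval_pred) -> dict:
    """Accuracy from (logits, labels); the Trainer calls this during evaluation."""
    logits, labels = eval_pred
    # Predicted class = index of the largest logit in each row.
    predictions = [max(range(len(row)), key=lambda i: row[i]) for row in logits]
    correct = sum(int(p) == int(y) for p, y in zip(predictions, labels))
    return {"accuracy": correct / len(predictions)}


def fine_tune(model_id: str = "distilbert/distilbert-base-uncased",
              dataset_id: str = "stanfordnlp/imdb",
              output_dir: str = "finetuned-model") -> dict:
    """Fine-tune a small classifier on a subset of the data and return eval metrics."""
    from datasets import load_dataset
    from transformers import (AutoModelForSequenceClassification, AutoTokenizer,
                              DataCollatorWithPadding, Trainer, TrainingArguments)

    tokenizer = AutoTokenizer.from_pretrained(model_id)
    model = AutoModelForSequenceClassification.from_pretrained(model_id, num_labels=2)

    # A small shuffled subset keeps the run short; shuffling mixes both classes.
    raw = load_dataset(dataset_id, split="train").shuffle(seed=42).select(range(1000))
    tokenized = raw.map(lambda batch: tokenizer(batch["text"], truncation=True),
                        batched=True)
    splits = tokenized.train_test_split(test_size=0.2, seed=42)

    args = TrainingArguments(output_dir=output_dir, num_train_epochs=1,
                             per_device_train_batch_size=16, learning_rate=2e-5)
    trainer = Trainer(model=model, args=args,
                      train_dataset=splits["train"], eval_dataset=splits["test"],
                      data_collator=DataCollatorWithPadding(tokenizer),
                      compute_metrics=compute_metrics)
    trainer.train()
    return trainer.evaluate()
```

The run touches only a thousand examples for one epoch, which reflects the point above: adapting an existing checkpoint needs far less data and compute than training from scratch.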

Evaluation

The Evaluate library helps developers efficiently evaluate language models and datasets, offering dozens of metrics for different types of tasks, from natural language processing to reinforcement learning and computer vision. It simplifies the evaluation process by providing ready-made metric implementations, so there is no need to write your own.

On the Evaluate page, you can select the desired metric from a ready-made list, study its description and limitations, and follow the interactive usage instructions. The library integrates with other components of the Hugging Face ecosystem, including the Trainer API and popular datasets from the Hub. Thanks to this, evaluation can be easily built into the working pipeline and adapted to specific tasks.
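As a small illustration, the sketch below loads a ready-made metric and places a hand-written version next to it as a cross-check. It assumes the `evaluate` package is installed along with the dependencies of the accuracy metric (such as scikit-learn); both function names are hypothetical.

```python
def plain_accuracy(predictions: list[int], references: list[int]) -> float:
    """Hand-written accuracy, kept only as a cross-check for the library metric."""
    matches = sum(p == r for p, r in zip(predictions, references))
    return matches / len(references)


def library_accuracy(predictions: list[int], references: list[int]) -> float:
    """Compute the same number with a ready-made metric from Evaluate."""
    import evaluate  # lazy import of the Evaluate library

    metric = evaluate.load("accuracy")
    return metric.compute(predictions=predictions, references=references)["accuracy"]
```

For the same inputs the two functions should agree; the practical benefit of the library version grows with metrics that are harder to implement correctly by hand.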

Sharing

Hugging Face Model Hub allows developers to not only store their own ML models but also share them with other community members. They can also explore third-party solutions uploaded to the database. Each model repository has version control, visualization of differences, and commit history.

The version control system, based on Git and Git Large File Storage (LFS), supports revisions. This makes it easy to capture the state of the model and roll back to previous versions if necessary. With the gating system, model owners can control who has access to their models.

The inference widget allows users to interact with the model directly in the Model Hub. Models are preferably provided in PyTorch, TensorFlow, and Flax formats, although other frameworks are supported. Models can be uploaded to the platform using the Trainer API, the Hub web interface, or alternative methods.
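Revisions and uploads can both be driven from code. The sketch below assumes `huggingface_hub` is installed and, for publishing, that you are logged in with write access; the helper names are hypothetical, and any repository id passed to `publish` would be your own.

```python
def resolve_url(repo_id: str, filename: str, revision: str = "main") -> str:
    """Build the download URL for one file at a given branch, tag, or commit."""
    return f"https://huggingface.co/{repo_id}/resolve/{revision}/{filename}"


def download_pinned(repo_id: str, filename: str, revision: str) -> str:
    """Fetch a file exactly as it existed at `revision` and return its local path."""
    from huggingface_hub import hf_hub_download  # lazy import

    return hf_hub_download(repo_id=repo_id, filename=filename, revision=revision)


def publish(model, tokenizer, repo_id: str) -> None:
    """Upload a Transformers model and its tokenizer to a Hub repository."""
    # Both objects expose push_to_hub(); each call creates a new commit.
    model.push_to_hub(repo_id)
    tokenizer.push_to_hub(repo_id)
```

Pinning `revision` to a tag or commit hash keeps an application on a known model state even if the repository later changes.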

Leveraging the Ecosystem for Faster Innovation

Hugging Face has changed the game in the AI industry by making it easier and more accessible to develop and deploy intelligent solutions. A broad ecosystem of tools, open models, computing resources, and an active community accelerates innovation and lowers the barrier to entry into machine learning. This opens up new opportunities for both large companies and independent developers, making it easier to analyze, refine, and launch models in real-world conditions.

The key benefits of the Hugging Face platform that accelerate innovation and make working with artificial intelligence easier include:

- Streamlining AI product development. The platform reduces the complexity of the AI application development process, making it accessible not only to large companies but also to individuals. To this end, it provides hundreds of thousands of open-source LLMs, computing resources for their training and tuning, as well as APIs for deployment and other tasks.

- Ease of model and dataset analysis. Hugging Face capabilities allow you to effectively analyze machine learning models and datasets, evaluating them on a wide range of criteria in a no-code format. This simplifies and speeds up LLM performance assessment without requiring additional resource expenditures from developers.

- Support for prototyping and model deployment. With Hugging Face Spaces, developers can prototype LLMs and run demos of AI-powered applications through an integrated service. This enables streamlined model deployment and demonstration processes without requiring additional computing power.

- The role of the community in the development of the AI industry. Hugging Face is the largest online hub for AI developers and researchers. The number of its users has exceeded 5 million. The tools of this platform are used by more than 50,000 companies and organizations. In addition, it actively interacts with the leaders of the AI industry in the framework of joint projects. For example, in 2022, the platform participated in the development of the BLOOM model, and in 2024, in the creation of an AI online translator together with Meta and UNESCO.

Conclusion

Founded in 2016, the Hugging Face AI platform has become a catalyst for qualitative changes in the AI industry. Its large database of SOTA models, Model Hub; its solid ML library, Transformers; its dataset storage; and Spaces, its service for deploying AI applications, make a significant contribution to the development of AI/ML technologies and the implementation of innovations. By uniting millions of developers and researchers, Hugging Face creates a space for fruitful cooperation and the implementation of new promising projects in the field of artificial intelligence.
