At the beginning of 2020, a new hybrid data management architecture emerged that combines the strengths of classic data warehouses (DWHs) with the flexibility of data lakes. It is called the "lakehouse". A lakehouse implements data structures and data management functions similar to those of a data warehouse directly on top of low-cost open cloud storage. One of the best-known examples of a lakehouse is the Databricks platform. In this article, we look at what Databricks is and why it is in such high demand in the AI and ML industry. You will learn about its main functions and capabilities, its interface and ecosystem, its key areas of application, and how to start using it.

What is Databricks

Databricks is an open, cloud-based platform for storing, analyzing, and managing large datasets and for deploying artificial intelligence solutions. It is developed by the company of the same name, founded in 2013 by the creators of Apache Spark, which has also released several widely used open-source data and machine learning (ML) projects, including Delta Lake, MLflow, and Koalas. Iranian scientist and entrepreneur Ali Ghodsi has been the CEO of Databricks since 2016.

The platform supports end-to-end ML solutions and automates data operations from discovery to production. It is built on Apache Spark, the open-source analytics engine for large-scale data processing that the Databricks founders originally created. On top of Spark, Databricks provides automated cluster management and IPython-style notebooks.

An important milestone in the product's development was its integration with the Microsoft Azure cloud. A collaboration between Databricks and Microsoft produced a managed version of the platform called Azure Databricks, which gives Azure customers access to the full set of Databricks tools. Users can configure components in a few clicks, streamline workflows, and use the interactive tools on their own or as a team.

The lakehouse platform simplifies and speeds up collaboration between engineers, data scientists, and business analysts. It integrates closely not only with Azure cloud storage but also with Microsoft big data services such as Azure Data Lake Storage, Azure Data Factory, Azure Synapse Analytics, and Power BI. Major competitors of Databricks include well-known data services such as Oracle Database, Amazon Redshift, SQL Server, MongoDB Atlas, Google BigQuery, and Snowflake Data Cloud.

The company continues to develop the product and actively collaborates with others in the AI and data science industry, regularly taking part in major industry events. Its own 2024 conference, Data + AI Summit, was held from June 10 to 13 at the Moscone Center in San Francisco, California, USA. Key project figures attended, including co-founder and CTO Matei Zaharia, a Romanian-Canadian computer scientist. Speakers presented talks, ran sessions, and gave demonstrations for customers, partners, and other stakeholders.

Areas of Use

With a general picture of the lakehouse platform in mind, a natural question follows: what is Databricks used for? Its performance and broad functionality make it suitable for a wide range of tasks across different areas. The most popular are:

- Data Science. Working with data is one of the platform's priority use cases. It provides a set of powerful tools for discovering, annotating, and exploring data.

- Predictive analytics. The platform appeals to developers of machine learning models focused on predictive analytics.

- Real-time analytics. The platform can process streaming data and train models for real-time analytics. It also supports building dashboards and visualizations.

- ETL. Extract, transform, load (ETL), a three-step data management process, is one of the platform's key use cases. Its tools let you build and monitor ETL pipelines that extract data from sources, transform it, and load it into the warehouse.

- Machine Learning. Developing and training ML models is one of the platform's core areas. Its tools and ecosystem provide a complete workspace for experimenting with, training, deploying, and maintaining models, including large language models (LLMs). Support for popular ML libraries (TensorFlow, PyTorch, and others) plays an important role.
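
The three ETL steps from the list above can be sketched in plain Python. This is a toy illustration rather than Databricks code: the CSV input, the column names (`order_id`, `amount`, `country`), and the in-memory `warehouse_table` list are hypothetical stand-ins for a real source system and target table.

```python
import csv
import io

# Hypothetical raw source; a real pipeline would read files from cloud storage.
RAW_CSV = """order_id,amount,country
1,19.99,us
2,,de
3,5.00,US
"""

def extract(text: str) -> list[dict]:
    """Extract: read raw rows from the source."""
    return list(csv.DictReader(io.StringIO(text)))

def transform(rows: list[dict]) -> list[dict]:
    """Transform: drop rows with missing amounts, convert types, normalize casing."""
    clean = []
    for row in rows:
        if not row["amount"]:
            continue  # skip incomplete records
        clean.append({
            "order_id": int(row["order_id"]),
            "amount": float(row["amount"]),
            "country": row["country"].upper(),
        })
    return clean

def load(rows: list[dict], warehouse: list[dict]) -> int:
    """Load: append cleaned rows to the target table and return how many were loaded."""
    warehouse.extend(rows)
    return len(rows)

warehouse_table: list[dict] = []
loaded = load(transform(extract(RAW_CSV)), warehouse_table)
print(loaded, warehouse_table)
```

Keeping each step as a separate function mirrors how ETL pipelines are usually organized: each stage can be tested and rerun on its own.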

Main Components

Now that we have covered where this hybrid cloud platform for big data is used, let's look at what Databricks actually does. Its powerful, versatile functionality covers three main areas:

- SQL.

- Data Science and Engineering.

- Machine Learning.

Let's look at each direction in more detail.

SQL

Databricks SQL is the part of the Databricks platform designed for data analysts and developers who need powerful tools for running SQL queries in the cloud. It lets you run complex analytical queries on large volumes of data with high performance and low latency. For example, analysts can query millions of transaction records to identify sales trends in near real time. Users can also build interactive dashboards and reports that help them spot business trends and make informed decisions. In addition, Databricks SQL works with data in major cloud object stores (such as Amazon S3 or Azure Blob Storage), providing continuous data access and efficient use of resources.
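
To illustrate the kind of sales-trend query described above, the example below uses Python's built-in `sqlite3` module as a local stand-in for a SQL warehouse. The `sales` table, its columns, and the rows are hypothetical; on Databricks SQL a similar `GROUP BY` query would run against a table in cloud storage, although date functions differ between SQL dialects.

```python
import sqlite3

# In-memory database standing in for a cloud SQL warehouse (hypothetical data).
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE sales (sale_date TEXT, region TEXT, amount REAL)")
conn.executemany(
    "INSERT INTO sales VALUES (?, ?, ?)",
    [
        ("2024-01-15", "EU", 120.0),
        ("2024-01-20", "US", 80.0),
        ("2024-02-03", "EU", 200.0),
        ("2024-02-11", "US", 95.0),
    ],
)

# Monthly revenue per region: a typical trend query behind a dashboard.
query = """
    SELECT substr(sale_date, 1, 7) AS month, region, SUM(amount) AS revenue
    FROM sales
    GROUP BY substr(sale_date, 1, 7), region
    ORDER BY month, region
"""
monthly = conn.execute(query).fetchall()
for row in monthly:
    print(row)
conn.close()
```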

Data Science and Engineering

The Data Science and Engineering area of Databricks provides end-to-end solutions for data scientists and engineers, simplifying the development of machine learning models and the deployment of analytics applications. The platform supports the full data lifecycle, from collection to analysis and visualization, with integrations for many data sources and automation of routine operations.

Among the tools that Databricks offers for these areas are:

- Apache Spark – for processing big data.

- Delta Lake – for reliable storage with ACID transactions, schema enforcement, and table versioning.

- MLflow – for machine learning lifecycle management.
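
A key idea behind Delta Lake is a transaction log: every write is recorded as a new table version, which allows atomic commits and "time travel" reads of earlier versions. The toy class below models only that idea in plain Python. It is a hypothetical illustration, not the Delta Lake API; real Delta Lake records file-level changes in JSON log files stored next to Parquet data files rather than keeping full snapshots.

```python
import copy
from typing import Optional

class VersionedTable:
    """Toy versioned table: each commit appends a full snapshot to a log.

    Hypothetical illustration only; it is not how Delta Lake stores data.
    """

    def __init__(self) -> None:
        self._log: list[list[dict]] = [[]]  # version 0 is the empty table

    @property
    def version(self) -> int:
        return len(self._log) - 1

    def append(self, rows: list[dict]) -> int:
        """Commit new rows as a new version and return the new version number."""
        snapshot = copy.deepcopy(self._log[-1]) + copy.deepcopy(rows)
        self._log.append(snapshot)
        return self.version

    def read(self, version: Optional[int] = None) -> list[dict]:
        """Read the latest version, or 'time travel' to an earlier one."""
        if version is None:
            version = self.version
        if not 0 <= version <= self.version:
            raise ValueError(f"version {version} does not exist")
        return copy.deepcopy(self._log[version])

table = VersionedTable()
table.append([{"id": 1, "status": "new"}])
table.append([{"id": 2, "status": "new"}])
print(table.version)          # → 2
print(table.read(version=1))  # → [{'id': 1, 'status': 'new'}]
```

Because old versions are never modified, readers always see a consistent snapshot even while new commits arrive.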

Databricks can ingest batch data into Azure cloud storage. The platform can also process data streams in real time from sources such as Apache Kafka, Azure Event Hubs, and Azure IoT Hub, which significantly expands its analytics and engineering capabilities. For example, companies can automate the processing of social media data to predict consumer trends. Another example in this area is building models that predict customer churn in telecommunications from user behavior data.
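
Spark Structured Streaming, which Databricks uses for real-time pipelines, by default processes an unbounded stream as a series of small micro-batches and updates results incrementally. The sketch below imitates that model in plain Python for the churn example above. The event format, the `batch_size` parameter, and the rule "flag a user after three `cancel_click` events" are all hypothetical; a real pipeline would read from Kafka or Event Hubs and would use a trained model rather than a fixed threshold.

```python
from collections import Counter
from itertools import islice
from typing import Iterable, Iterator

def micro_batches(events: Iterable, batch_size: int) -> Iterator[list]:
    """Split an (in principle unbounded) event stream into fixed-size micro-batches."""
    it = iter(events)
    while batch := list(islice(it, batch_size)):
        yield batch

def flag_churn_risk(events: Iterable[dict], batch_size: int = 2, threshold: int = 3) -> list[str]:
    """Incrementally count 'cancel_click' events per user and flag users at the threshold."""
    counts: Counter = Counter()  # running state carried across micro-batches
    flagged: list[str] = []
    for batch in micro_batches(events, batch_size):
        for event in batch:
            if event["type"] == "cancel_click":
                counts[event["user"]] += 1
                if counts[event["user"]] == threshold:
                    flagged.append(event["user"])
    return flagged

stream = [
    {"user": "a", "type": "cancel_click"},
    {"user": "b", "type": "login"},
    {"user": "a", "type": "cancel_click"},
    {"user": "b", "type": "cancel_click"},
    {"user": "a", "type": "cancel_click"},
]
print(flag_churn_risk(stream))  # → ['a']
```

The important design point is that state (`counts`) survives between batches, so results stay correct even though no batch sees the whole stream.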

The Databricks platform offers real-time collaboration tools available to the entire project team. They speed up iterations and improve the quality of research. Features such as automatic scaling and resource optimization make Databricks well suited to complex Data Science and Engineering problems.

Machine Learning

Databricks provides powerful tools for developing and scaling machine learning models that cover the whole process, from data preprocessing to deployment. Particularly noteworthy is its integration with popular libraries such as TensorFlow, PyTorch, Keras, and XGBoost. Developers can train models with these libraries directly in code or use Databricks AutoML to generate baseline models automatically.

With MLflow, developers can track training parameters and metrics and manage the model lifecycle, including deployment and maintenance through the Model Registry. Together with feature tables managed in the Databricks Feature Store, these capabilities make it possible to build recommendation systems, process huge amounts of data for personalized offers, or train deep neural networks for pattern recognition.
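
Experiment tracking means recording the parameters and metrics of every training run so that runs can be compared and the best model promoted. In MLflow this is done with calls such as `mlflow.log_param` and `mlflow.log_metric` inside `mlflow.start_run()`. To keep the example self-contained, the sketch below does not use MLflow: `RunTracker` is a hypothetical in-memory class, and the "model" is a toy search for the best slope in `y = slope * x`, used only to show the bookkeeping.

```python
from dataclasses import dataclass, field

@dataclass
class Run:
    run_id: int
    params: dict = field(default_factory=dict)
    metrics: dict = field(default_factory=dict)

class RunTracker:
    """Hypothetical in-memory tracker mimicking the idea of MLflow runs (not its API)."""

    def __init__(self) -> None:
        self.runs: list[Run] = []

    def start_run(self) -> Run:
        run = Run(run_id=len(self.runs))
        self.runs.append(run)
        return run

    def best_run(self, metric: str) -> Run:
        """Return the run with the lowest value of the given metric."""
        return min(self.runs, key=lambda r: r.metrics[metric])

# Toy data following y = 2x; each "training run" tries one candidate slope.
xs = [1.0, 2.0, 3.0]
ys = [2.0, 4.0, 6.0]

tracker = RunTracker()
for slope in (1.0, 2.0, 3.0):
    run = tracker.start_run()
    run.params["slope"] = slope  # log the hyperparameter
    mse = sum((slope * x - y) ** 2 for x, y in zip(xs, ys)) / len(xs)
    run.metrics["mse"] = mse     # log the evaluation metric

best = tracker.best_run("mse")
print(best.params, best.metrics)  # → {'slope': 2.0} {'mse': 0.0}
```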

Integration with Apache Spark adds further power: Spark's MLlib library provides scalable, distributed machine learning algorithms, which keeps data processing fast and efficient at every stage of working with models.

Ecosystem

Databricks is a holistic data ecosystem focused on innovation and collaboration in analytics, machine learning, and data science. It combines Apache Spark (for big data processing), MLflow (for machine learning lifecycle management), and Delta Lake (for reliable lakehouse storage). Together, these make Databricks a powerful tool for companies pursuing digital transformation.

An important part of the ecosystem is Databricks Marketplace, which is built on the open Delta Sharing protocol. It offers datasets as well as AI and analytics assets, including notebooks, apps, machine learning models, dashboards, and end-to-end solutions. Consumers do not need a particular proprietary platform, costly replication, or expensive ETL to use them. The marketplace helps teams process and analyze data faster and more efficiently while avoiding lock-in to a single provider.

Key Features

Among the main features of the platform are:

- Unified workspace. Databricks is a single environment for storing, processing, and analyzing large volumes of data. It includes real-time collaboration tools that help individuals and teams share information and resources, making work easier and faster.

- Automation. The platform effectively automates a number of operations, including cluster creation, task scheduling, and scaling. This makes it easier and faster for developers to create, deploy, and manage datasets and ML models.

- Integration with the Azure ecosystem. Azure Databricks is tightly integrated with Microsoft Azure, which extends its core capabilities. Users can work with Azure services such as Azure Blob Storage, Azure Data Lake Storage, and Azure SQL Database and keep data synchronized with them.

- Scalability. Because it is built on the open-source Apache Spark framework, the platform scales horizontally: large datasets are split into partitions that are processed in parallel across cluster nodes, so it can handle several large datasets and complex analytical tasks at once.
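
The scalability described above comes from Spark's execution model: a dataset is split into partitions, each partition is processed independently by a task, and the partial results are combined. The sketch below imitates that split, process, combine pattern on one machine with Python's `concurrent.futures`. The partition count and the sum-of-squares job are arbitrary choices for illustration; threads keep the example portable and show the structure rather than a real speedup, whereas Spark distributes tasks across cluster nodes.

```python
from concurrent.futures import ThreadPoolExecutor

def partition(data: list[int], num_partitions: int) -> list[list[int]]:
    """Split data into roughly equal contiguous partitions."""
    size, extra = divmod(len(data), num_partitions)
    parts, start = [], 0
    for i in range(num_partitions):
        end = start + size + (1 if i < extra else 0)
        parts.append(data[start:end])
        start = end
    return parts

def sum_of_squares(part: list[int]) -> int:
    """Per-partition task: runs independently of all other partitions."""
    return sum(x * x for x in part)

def parallel_sum_of_squares(data: list[int], num_partitions: int = 4) -> int:
    parts = partition(data, num_partitions)
    with ThreadPoolExecutor() as pool:
        partials = list(pool.map(sum_of_squares, parts))  # "map" phase
    return sum(partials)  # combine phase, like a reduce

print(parallel_sum_of_squares(list(range(1000))))  # → 332833500
```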

Interface

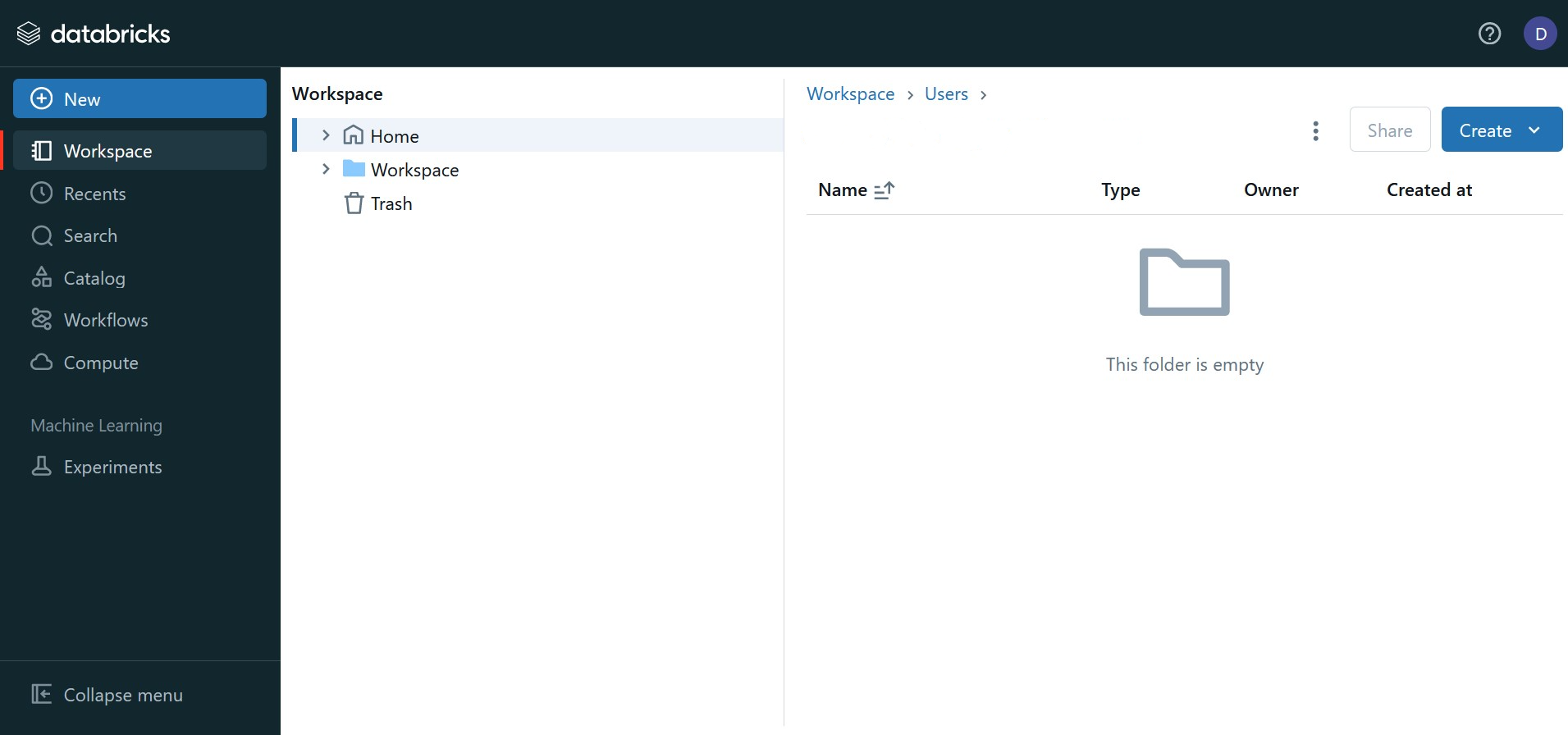

The core of the Databricks user interface is the Workspace. It provides access to all platform resources and organizes them into folders.

The workspace consists of the following components:

- Dashboard. This section contains tools for data visualization.

- Library. This section stores the packages that tasks running on a cluster depend on; developers can also add their own libraries here.

- Repos. Notebooks and files in this section are synchronized with a remote Git repository, so you can pull, commit, and push changes under version control.

- Experiments. Here you can find information about MLflow runs carried out during the ML model training process.

To take full advantage of Azure Databricks tools, you don't need to migrate your data into Databricks-managed storage. Connecting the platform to your cloud account is enough: Azure Databricks then deploys compute clusters in that account and processes the data where it already resides. If necessary, you can control data access permissions with SQL statements through the Unity Catalog feature.
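
In Unity Catalog, permissions are granted with SQL statements of the form `GRANT <privilege> ON <securable> TO <principal>`, where tables are addressed by a three-level `catalog.schema.table` name. The helper below only builds such statements as strings, for example to generate them from a config file. The function name, the small privilege allowlist, the `main.sales.orders` table, and the `analysts` group are hypothetical, and the generated statement would still have to be run in a Databricks SQL editor or notebook.

```python
import re

# Restricting inputs keeps this sketch simple and avoids SQL injection.
ALLOWED_PRIVILEGES = {"SELECT", "MODIFY", "ALL PRIVILEGES"}
_IDENTIFIER = re.compile(r"[A-Za-z_][A-Za-z0-9_]*")

def grant_statement(privilege: str, table: str, principal: str) -> str:
    """Build a GRANT statement for a table given as catalog.schema.table."""
    priv = privilege.strip().upper()
    if priv not in ALLOWED_PRIVILEGES:
        raise ValueError(f"unsupported privilege {privilege!r}")
    parts = table.split(".")
    if len(parts) != 3 or not all(_IDENTIFIER.fullmatch(p) for p in parts):
        raise ValueError(f"expected catalog.schema.table, got {table!r}")
    # Principals (users or groups) are quoted with backticks; embedded backticks are doubled.
    quoted_principal = "`" + principal.replace("`", "``") + "`"
    return f"GRANT {priv} ON TABLE {table} TO {quoted_principal}"

print(grant_statement("select", "main.sales.orders", "analysts"))
# → GRANT SELECT ON TABLE main.sales.orders TO `analysts`
```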

System tools are available through the user interface (UI), API, and command line (CLI):

- UI. Azure Databricks is launched from the Azure portal, and most features are only a click or two away. Data processing, analysis, and security services are also available there.

- REST API. Two versions of this interface are currently available: REST API 1.2 and the newer REST API 2.0, which adds more functionality.

- CLI. The open-source command-line interface is published on GitHub; it is built on top of REST API 2.0.
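
As an illustration of the REST API, the snippet below prepares a request to the Clusters API endpoint `GET /api/2.0/clusters/list`, authenticated with a personal access token sent as a bearer token. It only builds the request with Python's standard `urllib.request` and does not send it. The workspace URL and the token are placeholders that you would replace with your own values.

```python
import urllib.request

def build_list_clusters_request(workspace_url: str, token: str) -> urllib.request.Request:
    """Prepare (but do not send) a Clusters API 2.0 'list' request."""
    url = workspace_url.rstrip("/") + "/api/2.0/clusters/list"
    return urllib.request.Request(
        url,
        headers={"Authorization": f"Bearer {token}"},
        method="GET",
    )

# Placeholder values: substitute your workspace URL and a personal access token.
req = build_list_clusters_request(
    "https://adb-1234567890123456.7.azuredatabricks.net/", "<personal-access-token>"
)
print(req.get_method(), req.full_url)
# Sending it with urllib.request.urlopen(req) would return a JSON description of the workspace's clusters.
```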

How to Use Databricks

To use Databricks effectively, follow these steps:

- Preparing the workspace. First, sign up for an Azure Databricks account and create a workspace. To do this, follow the recommendations in the documentation on the project website.

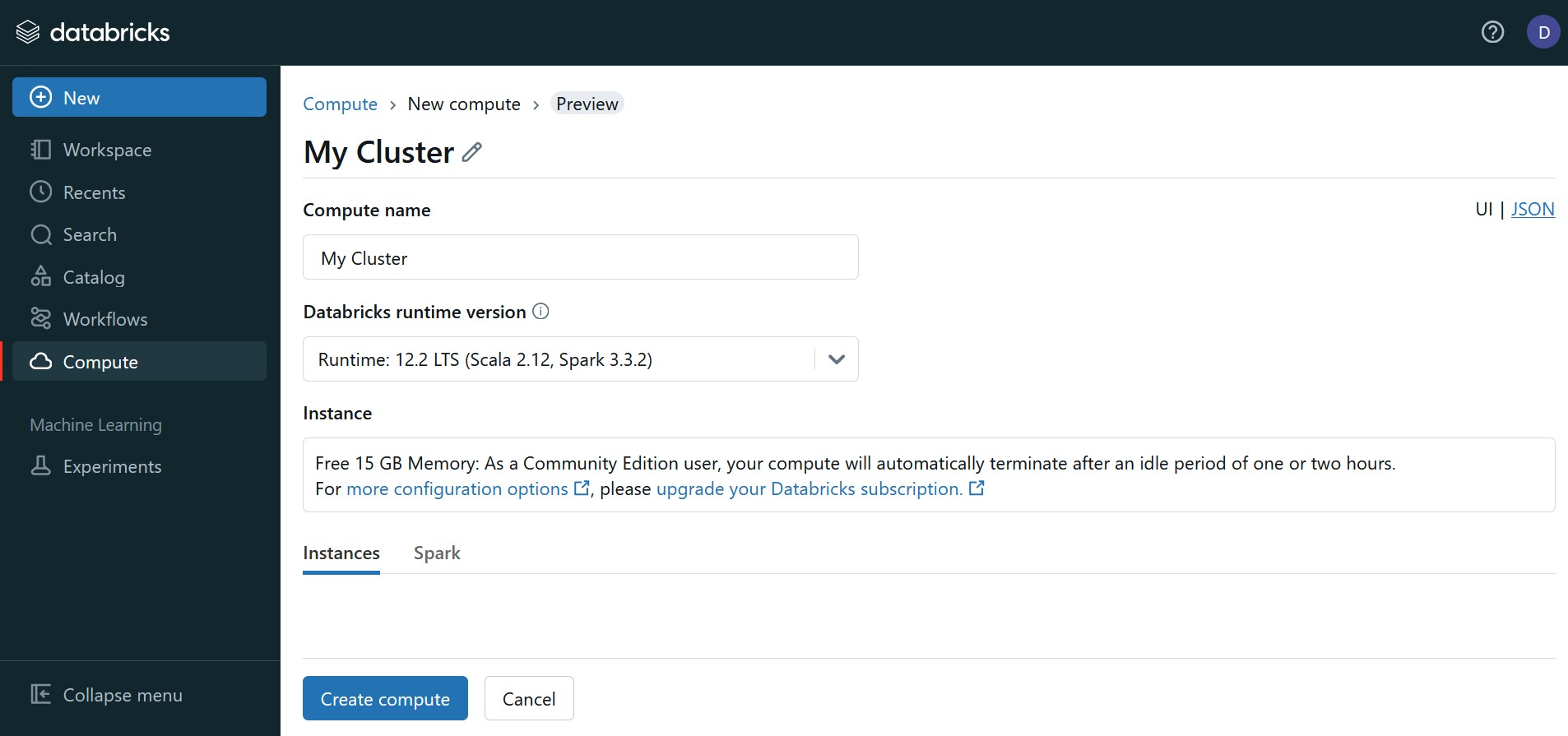

- Creating a cluster. Next, create a cluster (a set of compute nodes used to process data and run tasks). The platform lets you create and manage clusters manually or automate this, for example with autoscaling.

- Import data. Bring data from external resources into your workspace. The system supports various data sources, including Azure Blob Storage, Azure Data Lake Storage, and Azure SQL Database. Instructions for importing data are provided in the platform documentation.

- Data processing. Run notebooks or jobs to explore and process the loaded data, and use the platform's tools to transform, clean, and visualize it.

- Model training. Once the data is prepared, you can move to the final stage: developing, testing, and deploying ML models. Detailed guidance on every aspect of this process is also available in the documentation.
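
The cluster step can also be scripted. The sketch below assembles a JSON body for the Clusters API 2.0 create endpoint (`POST /api/2.0/clusters/create`) using its `cluster_name`, `spark_version`, `node_type_id`, `autoscale`, and `autotermination_minutes` fields. The helper function, the cluster name, the runtime version string, and the Azure VM type are example values only; the runtime versions and node types actually available should be checked in your workspace (the API lists them via `/api/2.0/clusters/spark-versions` and `/api/2.0/clusters/list-node-types`).

```python
import json

def cluster_create_payload(
    name: str,
    spark_version: str,
    node_type_id: str,
    min_workers: int = 1,
    max_workers: int = 4,
    autotermination_minutes: int = 30,
) -> str:
    """Build a JSON body for POST /api/2.0/clusters/create with autoscaling enabled."""
    if not 0 <= min_workers <= max_workers:
        raise ValueError("require 0 <= min_workers <= max_workers")
    body = {
        "cluster_name": name,
        "spark_version": spark_version,
        "node_type_id": node_type_id,
        # The platform adds or removes workers between these bounds based on load.
        "autoscale": {"min_workers": min_workers, "max_workers": max_workers},
        # Shut the cluster down after this many idle minutes to save cost.
        "autotermination_minutes": autotermination_minutes,
    }
    return json.dumps(body, indent=2)

payload = cluster_create_payload(
    name="etl-dev",                    # hypothetical cluster name
    spark_version="13.3.x-scala2.12",  # example runtime; check what your workspace offers
    node_type_id="Standard_DS3_v2",    # example Azure VM type
)
print(payload)
```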

Pricing

Databricks uses a pay-as-you-go pricing model: users pay only for the services they use, billed per second. They can choose which cloud provider to run the platform on: Azure, AWS, or Google Cloud. A 14-day free trial is available on each of them.

Basic rates:

- Workflows – starting from $0.15/DBU (Databricks Unit, a normalized unit of processing power used for billing).

- Delta Live Tables – starting from $0.2/DBU.

- Databricks SQL – starting from $0.22/DBU.

- Interactive Workloads – starting from $0.4/DBU.

- Mosaic AI – starting from $0.07/DBU.
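
Under this model, cost is the number of DBUs consumed multiplied by the per-DBU price. A cluster's DBU consumption is typically quoted per hour while billing is per second, so a rough estimate is DBU/hour × runtime in hours × price per DBU. The sketch below uses the starting rates listed above. The 4 DBU/hour consumption figure is a hypothetical assumption (actual consumption depends on instance types and cluster size), and for classic compute the cloud provider's VM charges come on top of the DBU cost.

```python
# Starting prices per DBU as listed above (actual rates vary by cloud, region, and plan).
RATES_PER_DBU = {
    "Workflows": 0.15,
    "Delta Live Tables": 0.20,
    "Databricks SQL": 0.22,
    "Interactive Workloads": 0.40,
    "Mosaic AI": 0.07,
}

def estimate_dbu_cost(workload: str, dbu_per_hour: float, runtime_seconds: int) -> float:
    """Estimate DBU charges: DBU/hour x hours (billed per second) x price per DBU."""
    hours = runtime_seconds / 3600
    return round(dbu_per_hour * hours * RATES_PER_DBU[workload], 2)

# Hypothetical job: a cluster consuming 4 DBU/hour running for 2 hours.
print(estimate_dbu_cost("Workflows", dbu_per_hour=4, runtime_seconds=7200))              # → 1.2
print(estimate_dbu_cost("Interactive Workloads", dbu_per_hour=4, runtime_seconds=7200))  # → 3.2
```

The same two-hour job costs more than twice as much as an interactive workload than as a scheduled workflow, which is why production pipelines are usually run as jobs.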

Conclusion

Databricks is a powerful, multifunctional data and AI platform that has proven itself in artificial intelligence and machine learning projects. Its tools are in high demand among data scientists, researchers, and analysts: they help teams efficiently collect, store, and process big data, work with it in a shared workspace, and use it to train ML models. By providing a single platform for exploring, analyzing, and applying data, Databricks drives technology innovation across industries.
