Generative AI technologies continue to develop rapidly in 2024, taking on new tasks and areas of activity. One of these is video production automation, which Runway Research offers through its Gen-2 neural network. This article introduces the AI model: what it is, its features and applications, its limitations, and its impact on the AI industry.

Creation and Development of Runway Research

The American startup Runway Research (now Runway AI, Inc.) is known for its generative AI models Gen-1 and Gen-2. Its products use artificial intelligence and machine learning (ML) to generate videos, images, and other digital content. Runway is also one of the developers of the popular text-to-image neural network Stable Diffusion.

The startup was founded in 2018 in New York by Cristóbal Valenzuela, Alejandro Matamala, and Anastasis Germanidis. They met at the Interactive Telecommunications Program (ITP), a two-year graduate program at New York University that combines engineering and creative practice. There, the co-founders explored how ML models could be used to create images and videos for the creative industries. Shortly after completing the program, they launched Runway Research. That same year, the startup began building a machine learning platform for deploying large-scale open-source models in multimedia applications. The company raised $2 million for this first project and then focused on developing AI- and ML-based tools for video editing and visual effects.

In 2020, Runway raised another $8.5 million in a Series A round. In December 2021, Runway Research and the CompVis group published the study “High-Resolution Image Synthesis with Latent Diffusion Models,” which became the basis for the Latent Diffusion (December 2021) and Stable Diffusion (August 2022) models. Also in December 2021, Runway Research raised $35 million in a Series B round, and a year later it raised another $50 million in a Series C round.

In March 2023, the company released Gen-1, its first multimodal AI model for automated video generation and editing (video-to-video). In June 2023, it introduced the second version, Gen-2, a generative neural network with extended capabilities (text-, image-, and video-to-video). After this successful flagship launch, several major investors (including Google, Nvidia, and Salesforce) invested $141 million in the startup, raising its estimated valuation to $1.5 billion. TIME magazine also recognized the company, naming Runway Research to its list of the 100 most influential companies in the world.

Overview of the Gen-2 AI Model

Gen-2 is more powerful and versatile than the first version of the neural network. First, it generates realistic videos from scratch. Second, it can modify existing videos, guided by a brief text description of the scene (a prompt) or by an image or video uploaded as a reference. It also lets you combine text and image prompts.

The platform supports 8 operating modes:

- Text to Video;

- Text + Image to Video;

- Image to Video;

- Stylization;

- Storyboard;

- Mask;

- Render;

- Customization.

The Stylization mode changes the style of a video to match your prompt or a reference image. The Storyboard mode turns mockups into stylized, animated renders. The Mask, Render, and Customization modes let you select and modify individual objects in your video using text prompts.

Runway Gen-2's advanced settings let you adapt generated content more precisely to your requests. For example, you can save the seed (the number that initializes random generation) for future generations. Reusing the same seed with different prompts produces videos with a similar style, and reusing the exact combination of seed and prompt reproduces an identical video. With this feature, you can generate several short clips in a consistent style and then combine them into one story. Other useful settings include upscaling (which increases resolution) and interpolation (which smooths transitions between frames).
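The seed behavior described above reflects a general principle of generative models: the seed fixes the random starting point from which generation begins. The Python sketch below illustrates that principle with the standard `random` module. It is not Runway's code or API; the `initial_noise` function and its `size` parameter are hypothetical.

```python
import random


def initial_noise(seed: int, size: int = 8) -> list[float]:
    """Return reproducible Gaussian noise for a given seed.

    Hypothetical stand-in for the random starting point a generative
    model begins from; it does not call or reproduce Runway's service.
    """
    rng = random.Random(seed)  # private generator, deterministic for a given seed
    return [rng.gauss(0.0, 1.0) for _ in range(size)]


first = initial_noise(seed=42)
repeat = initial_noise(seed=42)
other = initial_noise(seed=7)

# Same seed: identical starting point, so the result can be reproduced.
print(first == repeat)  # True
# Different seed: a different starting point.
print(first == other)
```

In typical diffusion models, the prompt then steers how this starting noise is turned into frames, which is why a shared seed with different prompts tends to give related rather than identical results.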

The system detects copyrighted images and rejects them, so you cannot generate videos based on frames from films, TV series, or other commercial content. Photographs and stock images, however, are permitted.

Terms of Use

You can use Runway Research's service through its web interface and its iOS mobile app. All generated videos, seeds, and other content are saved in the cloud, so you can access them from any device.

All paid account owners can create an unlimited number of projects; free users are limited to 3 projects. The platform allows generated content to be used for commercial purposes. Registration on the Runway website is open to users worldwide. To create an account, provide an email address or sign in with Google or Apple ID.

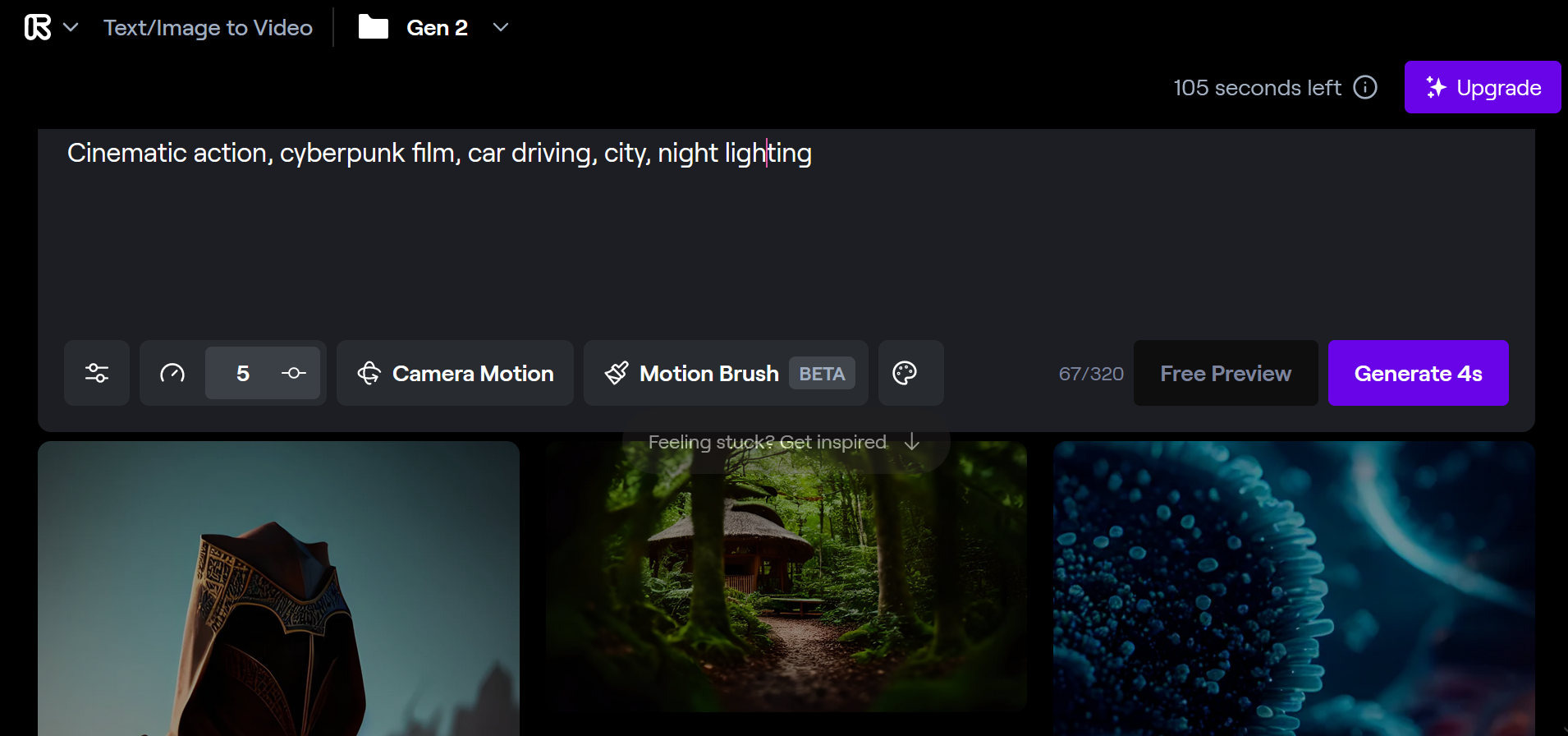

How to Generate Video

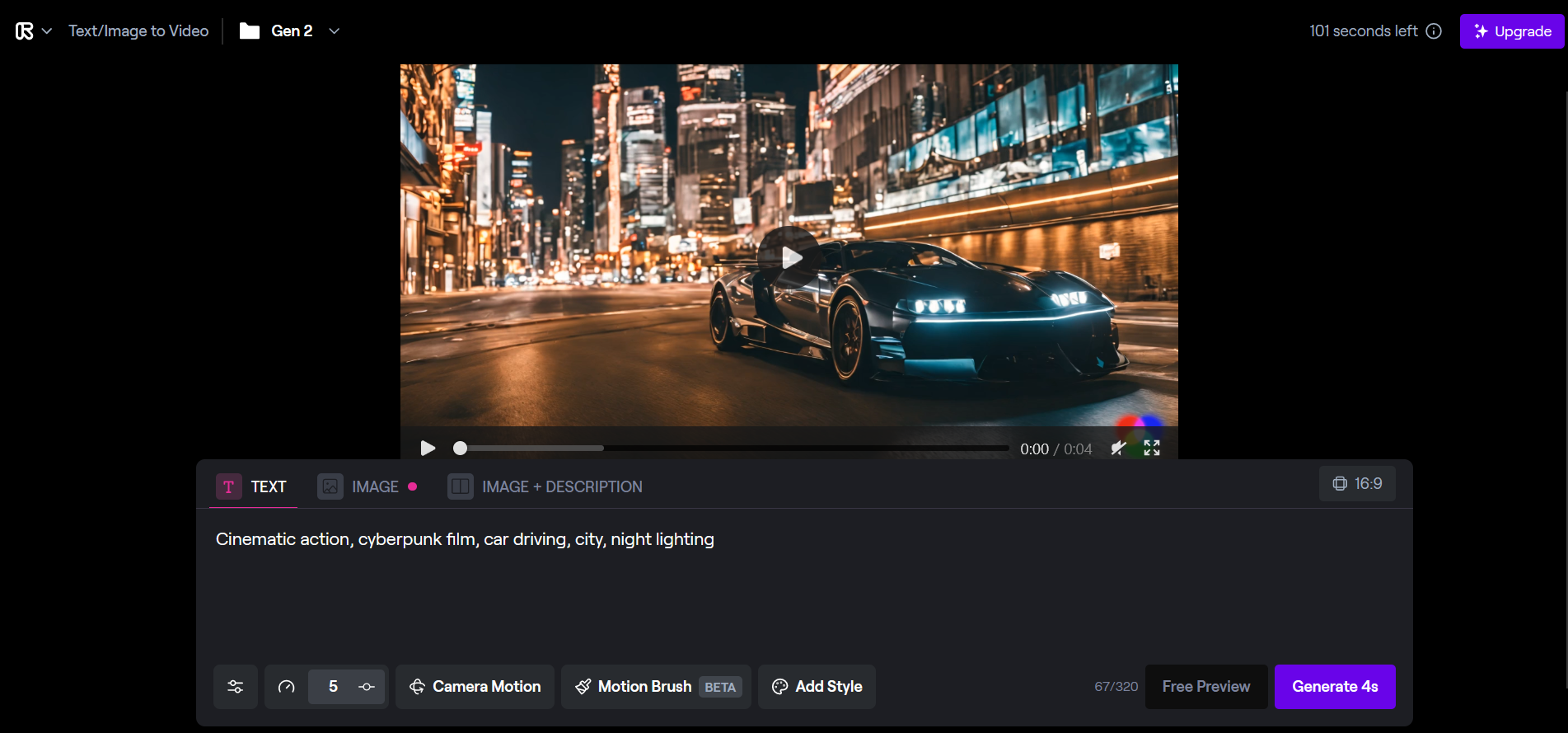

In a request to Runway Gen-2 AI, you can specify the following parameters for the generated video:

- style (cinematic action, animation, black and white, etc.);

- genre (sci-fi film, cyberpunk film, horror film, etc.);

- character actions (walking, running, talking, swimming, jumping, etc.);

- frame (wide angle, medium shot, close-up shot, extreme close-up shot, long shot);

- environment (beach, city, New York, the moon, volcano, etc.);

- lighting (sunset, sunrise, day, night, horror film lighting, sci-fi lighting);

- object (a woman with red hair, a man in a blue suit, a dog, a mountain, a boat, etc.).

After selecting the desired parameters, click the “Generate” button.

The finished video is automatically saved to the service’s cloud library, from which you can download it to your computer. If needed, you can also edit the created video: Runway offers more than 30 AI editing tools, including Green Screen for background removal, a timeline editor, subtitle and LUT creation, transcript export, and many others.

Advice: To make text prompts more accurate, experienced users recommend composing them in this order: style – genre – frame – object – action – environment – lighting. For example, "cinematic action, cyberpunk film, wide angle, car driving, New York, sunrise."
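The prompt formula above can be expressed as a small helper that assembles the components in the recommended order and skips any that are left empty. This is a hypothetical Python sketch for drafting prompts; the `PromptParts` and `build_prompt` names are illustrative and not part of any Runway tool, and the resulting text still has to be entered into Gen-2 by hand.

```python
from dataclasses import dataclass


@dataclass
class PromptParts:
    """Prompt components from the formula (hypothetical helper type)."""
    style: str = ""
    genre: str = ""
    frame: str = ""
    subject: str = ""  # the "object" slot, e.g. "a man in a blue suit"
    action: str = ""
    environment: str = ""
    lighting: str = ""


def build_prompt(p: PromptParts) -> str:
    """Join non-empty components in the order style, genre, frame,
    object + action, environment, lighting."""
    # Object and action read naturally as one phrase ("car driving").
    subject_action = " ".join(x for x in (p.subject, p.action) if x)
    ordered = [p.style, p.genre, p.frame, subject_action, p.environment, p.lighting]
    return ", ".join(x for x in ordered if x)


prompt = build_prompt(
    PromptParts(
        style="cinematic action",
        genre="cyberpunk film",
        frame="wide angle",
        subject="car",
        action="driving",
        environment="New York",
        lighting="sunrise",
    )
)
print(prompt)  # cinematic action, cyberpunk film, wide angle, car driving, New York, sunrise
```

Keeping the components as separate fields makes it easy to vary one element (for example, the lighting) while holding the rest of the prompt constant across several clips.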

Pricing

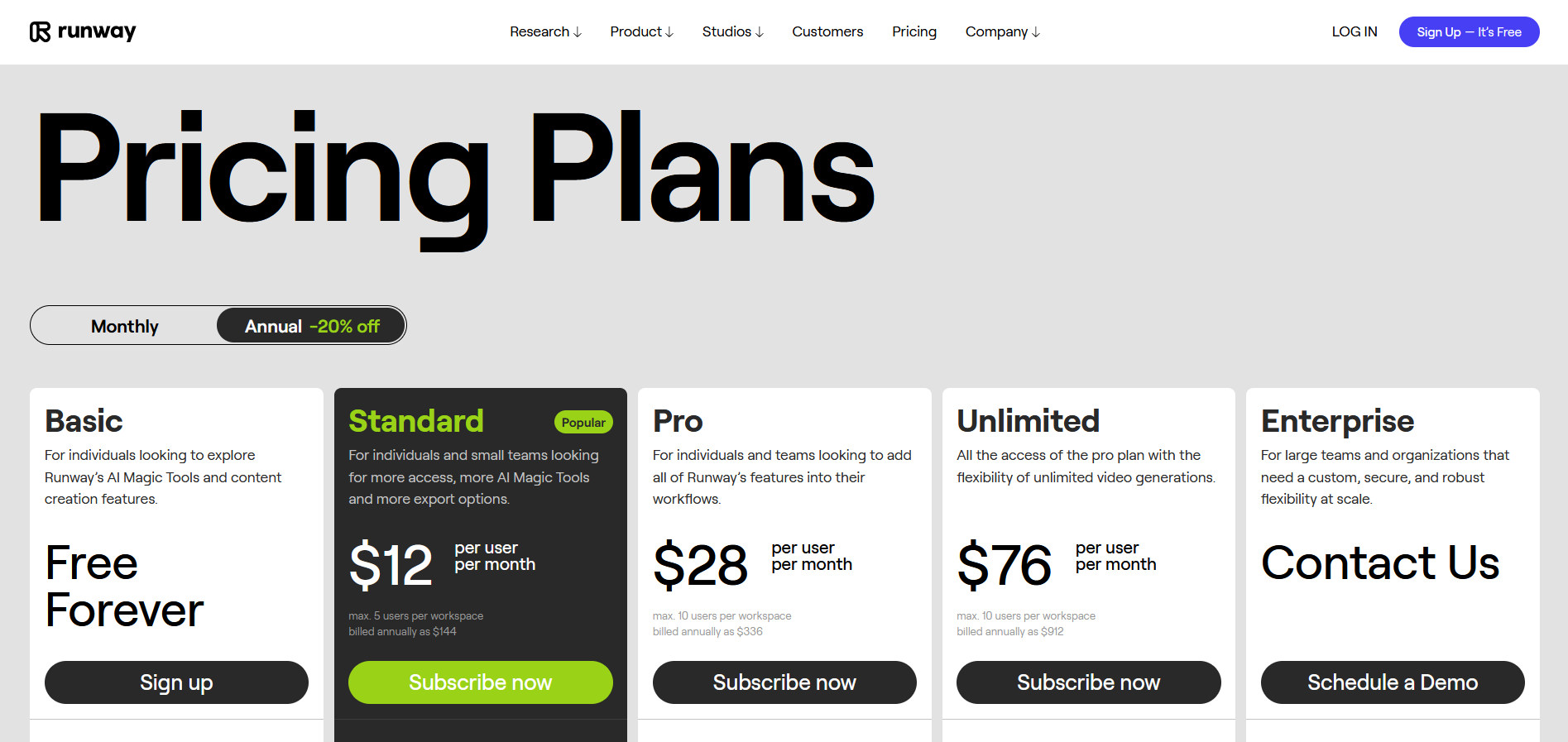

The service offers 5 pricing plans:

- Free Forever (free). Provides individual users with 125 credits every month, which is enough to generate 25 seconds of video. With this plan, you can add up to 3 editors, store up to 5 GB of data in the cloud, and download videos in 720p.

- Standard ($12 per user per month, up to 5 users, 625 credits per month). This plan lets you generate up to 125 seconds of video, add up to 5 editors, store up to 100 GB of data in the cloud, and download 4K videos and 2K images.

- Pro ($28 per user per month, up to 10 users, 2250 credits per month). The Pro plan lets you generate up to 450 seconds of video, add up to 10 editors, store up to 500 GB of data in the cloud, and download 4K videos and 2K images, plus PNG and ProRes files.

- Unlimited ($76 per user per month, up to 10 users). This plan includes all the features of the Pro plan and places no restrictions on video generation.

- Enterprise (price and features are negotiated individually). This plan offers model customization, enhanced security, adaptation to the company's needs, integration with internal tools, and priority technical support.

The listed prices assume annual billing paid upfront. With monthly billing, each plan costs more.
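The plan list implies a fixed conversion rate: 125 credits buy 25 seconds of video, which is 5 credits per second, and the same rate gives 125 seconds for Standard (625 credits) and 450 seconds for Pro (2250 credits). The short Python sketch below checks this arithmetic. The 5-credits-per-second rate is inferred from the figures in this article rather than taken from Runway's documentation, and actual rates may differ by model or setting.

```python
# Monthly credit allowances as listed above.
PLAN_CREDITS = {"Free Forever": 125, "Standard": 625, "Pro": 2250}

# Inferred from "125 credits ... 25 seconds of video": 125 / 25 = 5 (assumption).
CREDITS_PER_SECOND = 5


def seconds_of_video(credits: int, credits_per_second: int = CREDITS_PER_SECOND) -> int:
    """Whole seconds of video a credit balance covers at the given rate."""
    return credits // credits_per_second


for plan, credits in PLAN_CREDITS.items():
    print(f"{plan}: {credits} credits -> {seconds_of_video(credits)} s of video")
```

The function rounds down to whole seconds, a simplifying assumption; it does not model any per-clip minimums the service may apply.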

Application and Utility of the Gen-2 AI Model

Gen-2 can generate an unlimited number of realistic, high-quality videos in a variety of genres and styles, which opens up wide opportunities for its use in many creative fields. The neural network is a powerful tool both for professional video makers and for users with no video production experience. It spares them hours of editing and rendering by generating clips automatically.

Gen-2 is suitable for individuals, teams, studios, and companies of all types, sizes, and industries. The Runway Research product is especially in demand in the following areas:

- Marketing and advertising. With the neural network, marketers and advertisers can create the video content they need in a couple of clicks, without spending large budgets on video production services.

- Education. The platform can visualize complex concepts by generating clear, engaging educational videos.

- Video blogging. The AI model provides bloggers with an unlimited source of high-quality video content to engage their audience.

The built-in Footage Packs library extends what you can do with Runway Gen-2. Using ready-made footage with original visual effects, you can quickly create videos in your chosen style and theme. Examples include:

- 1980s Sci-Fi Footage Pack (15 Videos): Retro-futuristic 1980s vibe.

- Plant Life Footage Pack (18 Videos): The beauty of plants with exceptional detail.

- Oceans Footage Pack (15 Videos): The mysterious depths of the underwater world.

- Mountains Footage Pack (17 Videos): The noble grandeur of the mountain ranges.

- Epic Explosions Footage Pack (21 Videos): Captivating scenes of super-powerful explosions.

- Animated Nature Footage Pack (17 Videos): Dynamic life of wild nature.

The Impact of Gen-2 on the AI Industry

Co-founder and CEO Cristóbal Valenzuela compares the release of Gen-2 by Runway to the invention of the first video camera. In his opinion, the new technology will forever change storytelling and will lead to the emergence of AI-generated feature films in the near future.

The launch of Gen-2 marked significant progress not only in video making but also in generative artificial intelligence more broadly. Many consider it one of the most capable AI tools for video generation currently available. Runway's own user studies, published for its predecessor Gen-1, showed a strong preference over competing image-to-image and video-to-video methods: 73.53% of participants preferred Gen-1's results to those of Stable Diffusion, and 88.24% preferred them to another well-known platform, Text2Live.

However, some professional video makers consider Runway Gen-2 more of a toy for the curious than a truly useful tool. Their skepticism stems from the unpredictability of AI-generated results and the inability to fine-tune them manually. In their view, this technology is unlikely to put directors, animators, and computer graphics artists out of work, at least not yet.

Disadvantages and Limitations

Runway Gen-2 isn't perfect. Like any other neural network, it has drawbacks. This does not mean the model is bad; its creators simply still have work to do.

The weaknesses of the service include:

- Frame rate. Videos generated by the neural network have a fairly low frame rate, and in places they look like a slideshow.

- Video quality. The AI model often produces blurry or grainy videos and renders characters’ fingers and eyes poorly. It can also add unnecessary objects or distort existing ones.

- Understanding prompts. Gen-2 does not always understand complex prompts. Sometimes it follows only some of the descriptors and ignores the rest.

- Input data. The quality and volume of available data significantly limit the model's capabilities. For example, a shortage of animation examples can make it difficult to generate animated content.

Conclusion

Runway's Gen-2 quickly won audience recognition and has firmly established itself among generative AI tools. In video making, it is considered one of the most promising platforms today, as it relieves users of routine video processing and rendering tasks. Its creators call it a revolutionary invention that could radically change the modern film and video industry. Not all experts share this view, however, pointing to limitations in the current version of the model. If the developers overcome them, the video production industry may indeed undergo a significant transformation.
