Training and deploying AI models requires significant computing resources that not every development team has. Until recently, this limited the adoption of AI technologies, which were largely built and used by large companies. The arrival of cloud services and tooling for developing and serving AI models has changed this situation dramatically. In this article, we discuss one of the best-known of these offerings: NVIDIA NIM, its tools, its applications, and its importance for the AI industry.

Introduction to NVIDIA NIM

NVIDIA NIM (NVIDIA Inference Microservices) is a suite of microservices provided by the NVIDIA AI Enterprise platform. It comprises a range of tools and technologies for deploying AI inference workloads in data centers, in the cloud, and on workstations.

The June 2024 release of the platform opened up new opportunities for AI software developers. According to NVIDIA, NIM reduces the time needed to deploy chatbots, AI assistants, and similar services from weeks to minutes.

NIM microservices support many types of ML models. Because each microservice packages a model together with an inference engine optimized for NVIDIA GPUs, companies can get more performance out of their computing infrastructure and deliver AI projects in less time.

Source: nvidia.com

NIM offers developers over 40 prepackaged models from NVIDIA and the community, including Databricks DBRX, Google Gemma, Meta Llama 3, Microsoft Phi-3, Mistral Large, Mixtral 8x22B, and Snowflake Arctic. These models can be accessed as hosted API endpoints in NVIDIA's API catalog and deployed on NVIDIA AI Enterprise infrastructure.
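Because NIM language-model endpoints follow the OpenAI-compatible chat completions format, a hosted model can be called with a plain HTTP POST. The sketch below only builds the request with Python's standard library and sends it in a separate function. The base URL `https://integrate.api.nvidia.com/v1` and the model ID `meta/llama3-8b-instruct` are assumptions taken from NVIDIA's public catalog examples; check them against the page of the model you actually use.

```python
import json
import urllib.request

# Assumed base URL of NVIDIA's hosted, OpenAI-compatible API; verify it on the
# model's page in the catalog before relying on it.
HOSTED_BASE_URL = "https://integrate.api.nvidia.com/v1"


def build_chat_request(model: str, prompt: str, api_key: str,
                       base_url: str = HOSTED_BASE_URL,
                       max_tokens: int = 256) -> urllib.request.Request:
    """Build (but do not send) an OpenAI-style chat completion request."""
    payload = {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
        "max_tokens": max_tokens,
    }
    return urllib.request.Request(
        url=f"{base_url.rstrip('/')}/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {api_key}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


def send_chat_request(request: urllib.request.Request, timeout: float = 60.0) -> str:
    """Send the request and return the text of the first choice."""
    with urllib.request.urlopen(request, timeout=timeout) as response:
        body = json.load(response)
    return body["choices"][0]["message"]["content"]
```

With a valid key, `send_chat_request(build_chat_request("meta/llama3-8b-instruct", "Hello!", api_key))` would return the model's reply. Keeping request construction separate from sending makes the payload easy to inspect, and pointing `base_url` at a self-hosted container reuses the same code.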

NIM has been integrated into the technology stacks of many well-known AI/ML platforms, including Amazon SageMaker, Microsoft Azure AI, LangChain, DataRobot, Dataiku, Replicate, and Saturn Cloud, among others. Its users also include global corporations such as Foxconn, Pegatron, Amdocs, Lowe's, ServiceNow, and Siemens.

Key Features and Architecture

The NVIDIA NIM architecture consists of several layers: API, server, runtime, and model engine. The system is modular: each microservice is packaged as a Docker container, which simplifies and speeds up deployment across different platforms and operating systems.

Each container can be downloaded from NVIDIA's NGC container registry (https://catalog.ngc.nvidia.com). For NVIDIA's own models, the platform ships pre-built, optimized inference engines and exposes industry-standard APIs, with Triton Inference Server handling the serving.

NVIDIA NIM microservices have the following characteristics:

- Offer ready-made ML models for inference, including models for generating text, images, and video; computer vision; speech recognition (speech-to-text); multimodal LLMs; and others.

- Enable model deployment with a single command, and support orchestration and autoscaling with Kubernetes in any suitable environment.

- Give access to optimized inference technologies: Triton Inference Server, TensorRT, TensorRT-LLM, and PyTorch.

- Provide built-in observability features, including Prometheus metrics, monitoring, and health checks, which can be integrated into existing enterprise workflows.

- Integrate natively with the NVIDIA AI Enterprise platform, which provides a wide range of tools, libraries, and frameworks for developing, deploying, and scaling AI applications.
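Prometheus metrics are served as plain text in the Prometheus exposition format, so they can be inspected without a full monitoring stack. The sketch below parses simple metric lines into a dictionary keyed by metric name and labels. The metrics URL (for example `http://localhost:8000/v1/metrics`) and the metric names in any sample are assumptions for illustration; check the NIM documentation for the actual endpoint and metric names.

```python
import re
import urllib.request
from typing import Dict, Tuple

# Key: (metric name, sorted tuple of (label, value) pairs); value: sample value.
MetricKey = Tuple[str, Tuple[Tuple[str, str], ...]]

# Simplified pattern for one sample line: name{labels} value [timestamp].
# It does not handle label values that contain a closing brace.
_SAMPLE_RE = re.compile(
    r"^(?P<name>[a-zA-Z_:][a-zA-Z0-9_:]*)"
    r"(?:\{(?P<labels>[^}]*)\})?"
    r"\s+(?P<value>\S+)(?:\s+\S+)?$"
)
_LABEL_RE = re.compile(r'([a-zA-Z_][a-zA-Z0-9_]*)="((?:[^"\\]|\\.)*)"')


def parse_metrics(text: str) -> Dict[MetricKey, float]:
    """Parse Prometheus text-format samples, skipping comments and blank lines."""
    samples: Dict[MetricKey, float] = {}
    for raw_line in text.splitlines():
        line = raw_line.strip()
        if not line or line.startswith("#"):
            continue  # '# HELP' and '# TYPE' lines carry metadata, not samples
        match = _SAMPLE_RE.match(line)
        if match is None:
            continue
        labels = tuple(sorted(_LABEL_RE.findall(match.group("labels") or "")))
        samples[(match.group("name"), labels)] = float(match.group("value"))
    return samples


def fetch_metrics(url: str, timeout: float = 5.0) -> Dict[MetricKey, float]:
    """Download and parse metrics from a running service (URL is an assumption)."""
    with urllib.request.urlopen(url, timeout=timeout) as response:
        return parse_metrics(response.read().decode("utf-8"))
```

For production use, a Prometheus server scraping the endpoint is the usual setup; a parser like this is mainly useful for quick checks and scripted smoke tests.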

Use Cases Across Industries

NVIDIA's suite of microservices is used in a wide range of applications, from customer service to data analytics and computer vision. The microservices simplify implementing and scaling AI solutions based on LLMs and specialized models. In 2025, the most in-demand NVIDIA NIM use cases include chatbots, RAG applications, speech and document recognition, and computer vision.

Multifunctional AI Chatbots and Virtual Assistants

NVIDIA microservices are widely used to deploy LLMs in interactive virtual assistants, support bots, chat interfaces, and other AI-powered programs. This makes it possible to build scalable solutions that respond in real time and deliver a personalized user experience.

RAG Applications

NVIDIA NIM tools are often used to build AI applications with retrieval-augmented generation (RAG) pipelines. This approach is particularly in demand for knowledge-base assistants and semantic search systems. A RAG pipeline first retrieves documents relevant to the user's query from a knowledge base and then passes them to the LLM as context, so its answers are accurate and grounded in that data.
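The retrieval and prompt-assembly steps of a RAG pipeline can be sketched in a few lines of Python. In a real NIM-based pipeline, the vectors would come from an embedding model and the messages would be sent to an LLM endpoint; here the embeddings are hand-written toy vectors and the prompt wording is a hypothetical choice, so the sketch shows only the ranking and prompt logic.

```python
import math
from typing import Dict, List, Sequence, Tuple

# A document is paired with its embedding vector.
Document = Tuple[str, Sequence[float]]


def cosine_similarity(a: Sequence[float], b: Sequence[float]) -> float:
    """Cosine of the angle between two vectors (0.0 if either is all zeros)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0.0 or norm_b == 0.0:
        return 0.0
    return dot / (norm_a * norm_b)


def retrieve(query_vec: Sequence[float], corpus: List[Document], k: int = 2) -> List[str]:
    """Return the texts of the k documents most similar to the query."""
    ranked = sorted(corpus, key=lambda doc: cosine_similarity(query_vec, doc[1]),
                    reverse=True)
    return [text for text, _ in ranked[:k]]


def build_rag_messages(question: str, passages: List[str]) -> List[Dict[str, str]]:
    """Put retrieved passages into the system prompt, ahead of the user question."""
    context = "\n\n".join(f"[{i + 1}] {p}" for i, p in enumerate(passages))
    system = ("Answer using only the numbered context below. "
              "If the answer is not there, say you don't know.\n\n" + context)
    return [{"role": "system", "content": system},
            {"role": "user", "content": question}]
```

The resulting list uses the chat message format of OpenAI-compatible APIs, so it can go directly into the `messages` field of a chat completions request. Production systems usually replace the linear scan with a vector database.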

Speech Recognition

NVIDIA's model serving platform provides speech recognition models based on the FastConformer architecture. They can transcribe speech in real time, with sub-second latency and high accuracy. This makes NIM popular for voice AI applications such as smart assistants, customer service chatbots, and real-time transcription tools.
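Real-time transcription depends on sending audio to the recognizer in small chunks rather than as one complete file. The arithmetic is simple: 16 kHz, 16-bit mono audio produces 16,000 × 2 = 32,000 bytes per second, so a 100 ms chunk is 3,200 bytes. The sketch below assumes raw PCM input and a 100 ms chunk size; it illustrates the chunking only and is not the NIM speech API, which has its own client protocol.

```python
from typing import Iterator


def chunk_bytes(sample_rate: int, sample_width: int, channels: int, chunk_ms: int) -> int:
    """Number of bytes in chunk_ms milliseconds of raw PCM audio."""
    frame_size = sample_width * channels       # bytes per sample frame
    frames = sample_rate * chunk_ms // 1000    # whole frames per chunk
    return frames * frame_size


def pcm_chunks(pcm: bytes, sample_rate: int = 16000, sample_width: int = 2,
               channels: int = 1, chunk_ms: int = 100) -> Iterator[bytes]:
    """Yield consecutive fixed-duration chunks; the last chunk may be shorter."""
    size = chunk_bytes(sample_rate, sample_width, channels, chunk_ms)
    if size <= 0:
        raise ValueError("chunk size must be positive")
    for start in range(0, len(pcm), size):
        yield pcm[start:start + size]
```

Smaller chunks shorten the wait before the first partial transcript can come back, at the cost of more messages per second; 100 ms is only a reasonable starting point here, not a NIM requirement.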

Document Recognition and Analysis

NVIDIA microservices are used to build software that extracts structured information from documents. They support scanned forms, invoices, handwritten notes, and more.

The suite includes packaged OCR models (such as PaddleOCR), so documents can be analyzed end to end without installing separate OCR tools. Deploying these models as containerized microservices provides API access for converting raw documents into structured JSON data.

Computer Vision

NIM's fast batch processing and inference deliver strong performance in computer vision tasks such as defect detection, object recognition, and product classification. Models such as EfficientNet and FastViT are optimized to deliver high-quality predictions with low latency, even when processing large volumes of data.
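Batch processing means grouping many inputs into one inference call so the GPU handles them together. A minimal client-side batching helper is sketched below; sending one request per batch and the example batch size of 32 are assumptions for illustration, since the best batch size depends on the model and the GPU.

```python
import math
from itertools import islice
from typing import Iterable, Iterator, List, TypeVar

T = TypeVar("T")


def batched(items: Iterable[T], batch_size: int) -> Iterator[List[T]]:
    """Yield lists of up to batch_size items, preserving input order."""
    if batch_size < 1:
        raise ValueError("batch_size must be at least 1")
    iterator = iter(items)
    while True:
        batch = list(islice(iterator, batch_size))
        if not batch:
            return
        yield batch


def num_requests(num_items: int, batch_size: int) -> int:
    """How many inference calls are needed for num_items inputs."""
    return math.ceil(num_items / batch_size)
```

For example, 1,000 product images with a batch size of 32 need `num_requests(1000, 32)`, that is 32 calls instead of 1,000. On Python 3.12 and later, `itertools.batched` offers the same grouping (yielding tuples).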

Data and Code Analysis

Banks and financial institutions use NIM to deploy ML models trained to analyze transactions in real time and detect potential fraud. NVIDIA microservices are also in demand for automated code analysis and review, where the deployed models flag potential errors and suggest improvements.

Deployment and Integration

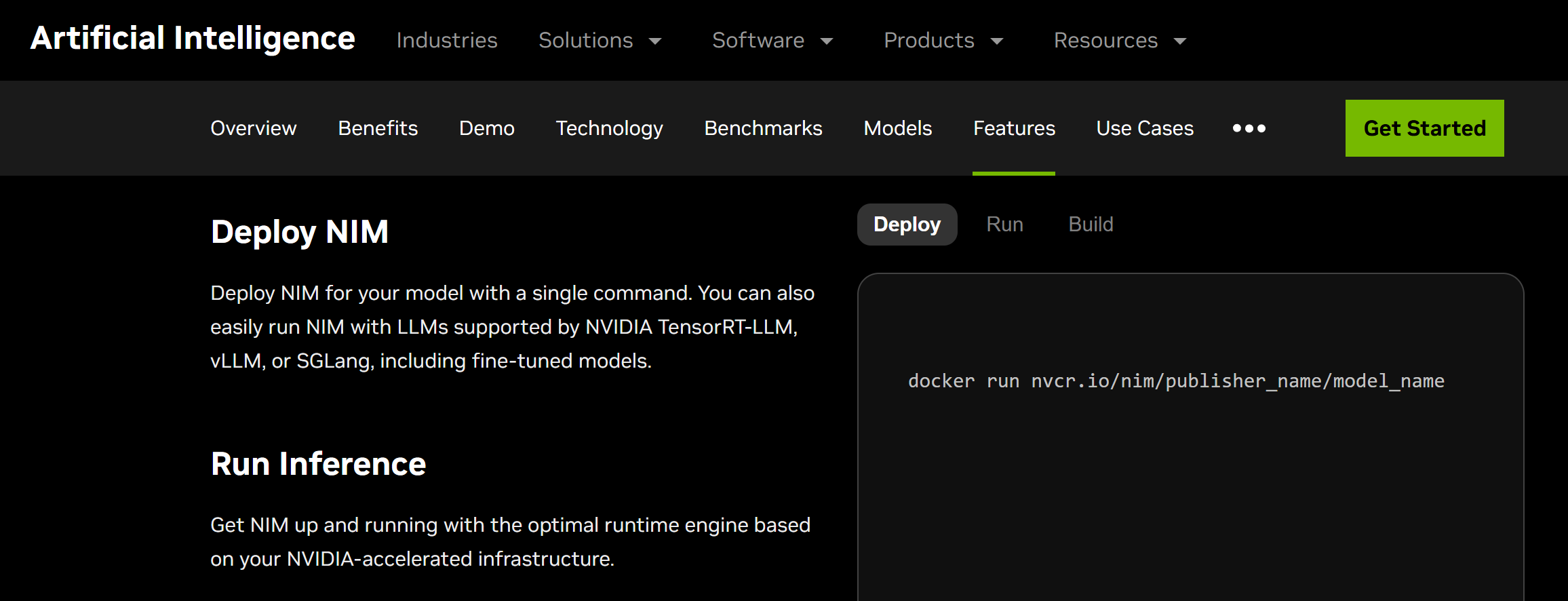

Source: nvidia.com

Now that you are familiar with the capabilities of NIM microservices, let's look at how to use NVIDIA NIM to deploy ML models. The process is straightforward, even for novice developers.

Here is a brief step-by-step outline:

- Go to the catalog at https://build.nvidia.com/explore/discover and select the model you need.

- Generate an API key to access the model.

- Log in to the NVIDIA NGC container registry (nvcr.io) to pull the container image.

- Select your deployment environment (cloud, on-premises, or edge).

- Pull the image into the deployment environment and specify a local path for caching the downloaded model.

- Run the Docker container.

- Once the service is running, it can report the list of models available for inference.

- Send an inference request to the model. Deployment can be performed either with a Kubernetes manifest or from the command line.
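The command-line steps above can be sketched in Python by assembling the Docker commands as argument lists. The flags follow the pattern of NVIDIA's NIM quick-start instructions as we understand them (registry `nvcr.io`, the literal username `$oauthtoken`, the API key in the `NGC_API_KEY` environment variable, the model cache mounted at `/opt/nim/.cache`, and the API on port 8000), but the image tag and cache path are placeholders; verify every value against the documentation for your model.

```python
import shlex
from typing import Any, Dict, List

REGISTRY = "nvcr.io"


def docker_login_cmd() -> List[str]:
    """Log in to the NGC registry; pipe the NGC API key in on stdin."""
    # "$oauthtoken" is a literal username, not a shell variable.
    return ["docker", "login", REGISTRY, "--username", "$oauthtoken", "--password-stdin"]


def docker_run_cmd(image: str, cache_dir: str, host_port: int = 8000,
                   shm_size: str = "16GB") -> List[str]:
    """Start a NIM container with GPU access and a persistent model cache."""
    return [
        "docker", "run", "--rm",
        "--gpus", "all",                        # expose all host GPUs
        f"--shm-size={shm_size}",               # shared memory for the inference runtime
        "-e", "NGC_API_KEY",                    # forward the key from the host environment
        "-v", f"{cache_dir}:/opt/nim/.cache",   # reuse downloaded weights across restarts
        "-p", f"{host_port}:8000",              # assumed in-container API port
        image,
    ]


def model_ids(models_response: Dict[str, Any]) -> List[str]:
    """Extract model IDs from an OpenAI-style GET /v1/models response."""
    return [entry["id"] for entry in models_response.get("data", [])]


if __name__ == "__main__":
    # Placeholder image and cache path; print the commands instead of running them.
    print(shlex.join(docker_login_cmd()))
    print(shlex.join(docker_run_cmd("nvcr.io/nim/meta/llama3-8b-instruct:1.0.0",
                                    "/home/user/.cache/nim")))
```

Once the container is up, a `GET http://localhost:8000/v1/models` request should list the served model, and `model_ids()` pulls the IDs out of that JSON so they can be used in inference requests. `shlex.join` quotes `$oauthtoken`, so a shell will not try to expand it.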

Kubernetes

Kubernetes is an open-source platform for automatically deploying, scaling, and managing containerized applications. Integration with it is one of the most popular NVIDIA NIM deployment methods.

Integration with Kubernetes lets developers quickly run ML models across various cluster, GPU, and storage configurations. NIM services can scale horizontally across the cluster's nodes, and key NIM metrics can be monitored.
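For the Kubernetes route, a Deployment manifest can be generated as JSON, which `kubectl apply -f` accepts just like YAML. The sketch below is a minimal manifest builder under several assumptions: the Secret names `ngc-api` and `ngc-registry` are hypothetical, GPUs are requested through the `nvidia.com/gpu` resource exposed by NVIDIA's device plugin, and the readiness path `/v1/health/ready` on port 8000 is assumed and should be checked in your model's NIM documentation.

```python
import json
from typing import Any, Dict


def nim_deployment(name: str, image: str, gpus: int = 1, replicas: int = 1,
                   container_port: int = 8000) -> Dict[str, Any]:
    """Build a minimal apps/v1 Deployment for one NIM container."""
    labels = {"app": name}
    container = {
        "name": name,
        "image": image,
        "ports": [{"containerPort": container_port}],
        "env": [{
            "name": "NGC_API_KEY",
            # Hypothetical Secret holding the NGC API key.
            "valueFrom": {"secretKeyRef": {"name": "ngc-api", "key": "NGC_API_KEY"}},
        }],
        # GPU request, scheduled via the NVIDIA device plugin.
        "resources": {"limits": {"nvidia.com/gpu": gpus}},
        # Assumed readiness endpoint; traffic is routed only after it passes.
        "readinessProbe": {
            "httpGet": {"path": "/v1/health/ready", "port": container_port},
            "initialDelaySeconds": 60,
            "periodSeconds": 10,
        },
    }
    return {
        "apiVersion": "apps/v1",
        "kind": "Deployment",
        "metadata": {"name": name, "labels": labels},
        "spec": {
            "replicas": replicas,
            "selector": {"matchLabels": labels},
            "template": {
                "metadata": {"labels": labels},
                "spec": {
                    # Hypothetical docker-registry Secret for pulling from nvcr.io.
                    "imagePullSecrets": [{"name": "ngc-registry"}],
                    "containers": [container],
                },
            },
        },
    }


if __name__ == "__main__":
    manifest = nim_deployment("llama3-8b", "nvcr.io/nim/meta/llama3-8b-instruct:1.0.0")
    print(json.dumps(manifest, indent=2))  # save to a file, then: kubectl apply -f <file>
```

Horizontal scaling then comes down to changing `replicas` (or attaching a HorizontalPodAutoscaler), with each replica claiming its own GPUs.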

Triton Inference Server

Triton Inference Server is a core tool in the NIM suite. This open-source inference server runs ML models efficiently in the cloud, in data centers, and on edge and embedded devices.

With Triton Inference Server, you can deploy models from many frameworks, including TensorRT, PyTorch, ONNX, OpenVINO, RAPIDS FIL, and others. It delivers optimized performance for many query types, including real-time, batched, and ensemble queries, as well as audio/video streaming.
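Triton's HTTP/REST interface follows the KServe v2 inference protocol: a client POSTs JSON to `/v2/models/<model>/infer`, listing each input's name, shape, datatype, and data. The sketch below builds such a request body for a single input tensor. The model and tensor names are hypothetical and must match the model's configuration in the Triton model repository; in a standalone Triton deployment, the HTTP endpoint listens on port 8000 by default.

```python
import math
from typing import Any, Dict, Sequence, Tuple


def v2_infer_request(model_name: str, input_name: str, data: Sequence[float],
                     shape: Sequence[int], datatype: str = "FP32",
                     output_names: Sequence[str] = ()) -> Tuple[str, Dict[str, Any]]:
    """Return (URL path, JSON body) for a single-input KServe v2 infer call."""
    # The flattened data must contain exactly as many elements as the shape implies.
    expected = math.prod(shape)
    if expected != len(data):
        raise ValueError(f"shape {list(shape)} needs {expected} values, got {len(data)}")
    body: Dict[str, Any] = {
        "inputs": [{
            "name": input_name,
            "shape": list(shape),
            "datatype": datatype,
            "data": list(data),  # row-major (C-order) flattening
        }]
    }
    if output_names:
        # Optional: request only specific outputs instead of all of them.
        body["outputs"] = [{"name": n} for n in output_names]
    return f"/v2/models/{model_name}/infer", body
```

The batch dimension is the first entry of `shape`: sending `[8, 3, 224, 224]` instead of `[1, 3, 224, 224]` submits eight images in one request, provided the model's configuration allows that batch size.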

Future Outlook and Benefits

NVIDIA's AI deployment platform offers developers several significant benefits:

- Simplified deployment. NIM simplifies and accelerates the deployment of AI models, which come prepackaged in containers and optimized to run on NVIDIA infrastructure. This largely removes the need for specialists to configure and debug each LLM by hand.

- Flexible scalability. The platform automates the scaling of model deployments across on-premises, cloud, and edge environments, so AI applications can adapt quickly to changing tasks and workloads.

- Advanced management and monitoring. The platform offers a comprehensive set of tools for monitoring resource and network status as well as model performance. This enables real-time monitoring of AI applications, visualization of key metrics, and flexible configuration management.
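On Kubernetes, automated scaling is typically handled by the HorizontalPodAutoscaler, whose documented rule is desiredReplicas = ceil(currentReplicas × currentMetric / targetMetric), with no change when the ratio is within a tolerance (0.1 by default). The sketch reproduces that rule to show how the replica count reacts to load. The metric (for example, in-flight requests per replica) and the replica bounds are hypothetical, and the real controller applies further rules, such as stabilization windows, that are omitted here.

```python
import math


def desired_replicas(current_replicas: int, current_metric: float, target_metric: float,
                     tolerance: float = 0.1, min_replicas: int = 1,
                     max_replicas: int = 10) -> int:
    """Simplified HPA rule: scale replicas in proportion to metric / target."""
    if target_metric <= 0:
        raise ValueError("target_metric must be positive")
    ratio = current_metric / target_metric
    if abs(ratio - 1.0) <= tolerance:
        return current_replicas  # close enough to the target: no change
    desired = math.ceil(current_replicas * ratio)
    # Clamp to the configured bounds.
    return max(min_replicas, min(max_replicas, desired))
```

With two replicas averaging 90 in-flight requests against a target of 45, the rule gives ceil(2 × 90 / 45) = 4, doubling the deployment.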

Experts estimate that the market for AI services and applications will reach a record $243.7 billion by 2025. This rapid growth is driven by the expanding range of fields and industries where AI can be applied.

The launch of the NVIDIA NIM platform marks a new milestone in the adoption of AI technologies. Its solutions for deploying AI models significantly accelerate and simplify the development and launch of innovative IT products.

The platform's capabilities are likely to grow significantly in the future, driven by a larger catalog of available models, better infrastructure performance, and new tools. Teams and companies will be able to use NIM microservices to build even more complex applications, including autonomous systems and advanced data analytics modules.
